It is not news to anyone that AI workloads drive dramatic surges in compute demand. But there is an even bigger price to pay. Behind the hype and excitement lies a truth that IT shops in the AI race know all too well: these workloads also bring a fivefold increase in storage and massive swells in energy consumption.

The AI Data Snowball Effect

“There is nary an area of personal life that isn’t already touched by AI,” remarked Ace Stryker, Director of Market Development at Solidigm, at the recent AI Field Day event in California.

AI has penetrated every sector, and enterprises are bending over backwards to guarantee a rich customer experience.

To make room for AI workloads, big tech companies have announced infrastructure overhauls. Smaller companies are following in their footsteps, expanding their infrastructure and, on occasion, rebuilding it.

Stryker points out that AI is not monolithic: its infrastructure requirements depend on deployment size and application. Still, there is no looking away from the fact that AI models have grown more complex and sophisticated over time, and as a consequence, applications have diversified across lines of business.

The biggest AI models now reach the trillion-parameter range, and the data fed into them has grown commensurately.

“Datasets are growing logarithmically,” says Stryker. “If you’re doing a large language model, a lot of those are built on the Common Crawl corpus, which are web scrapes every three or four months. They’ve been doing it since 2008. Today, the total body of text is 13 or 15 petabytes and rising.”

AI Needs More than Just Capacity

The massive increase in data volume calls for bigger and bolder storage investments, but space is not the only consideration. Extra capacity can always be bought. Better-performing storage, however, is a far smarter addition to an organization’s burgeoning AI efforts.

A white paper published by Meta and Stanford University states that storage consumes 35% of the entire server power footprint. That is not an insignificant number. AI models run on expensive accelerators like GPUs, and the bigger the model, the more of them it occupies. Storage feeds data into these GPUs, giving them the raw material for compute. Any delay in that transfer leaves the GPUs idle, inflating total cost of ownership (TCO) without producing any additional output.
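A back-of-the-envelope model shows why stalls are expensive. The sketch below rests on several assumptions. Each training step needs a fixed amount of GPU compute time, plus time spent waiting for data from storage. Each GPU-hour carries a hypothetical price. The function names and all numbers are illustrative placeholders, not figures from Meta, Stanford or Solidigm.

```python
def gpu_utilization(compute_s: float, load_s: float, overlapped: bool = False) -> float:
    """Fraction of wall-clock time the GPU spends computing during one step.

    Without overlap, the GPU waits for data, so a step takes compute + load.
    With perfect prefetching (overlapped=True), a step takes max(compute, load).
    """
    step_s = max(compute_s, load_s) if overlapped else compute_s + load_s
    return compute_s / step_s


def cost_per_useful_gpu_hour(hourly_cost: float, utilization: float) -> float:
    """Effective price paid for each hour of actual GPU compute."""
    return hourly_cost / utilization


if __name__ == "__main__":
    HOURLY_COST = 4.00  # hypothetical $/GPU-hour, placeholder only
    # Hypothetical: 0.30 s of compute per step, with slow vs fast data delivery.
    for load_s in (0.10, 0.02):
        util = gpu_utilization(0.30, load_s)
        cost = cost_per_useful_gpu_hour(HOURLY_COST, util)
        print(f"load={load_s:.2f}s utilization={util:.0%} effective cost=${cost:.2f}/h")
```

With these placeholder numbers, cutting data-wait time from 0.10 s to 0.02 s per step raises utilization from 75% to about 94%. Each hour of useful GPU work therefore costs less. Real pipelines overlap loading with compute (the `overlapped=True` case), but the stall returns whenever storage cannot keep up with the GPUs.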

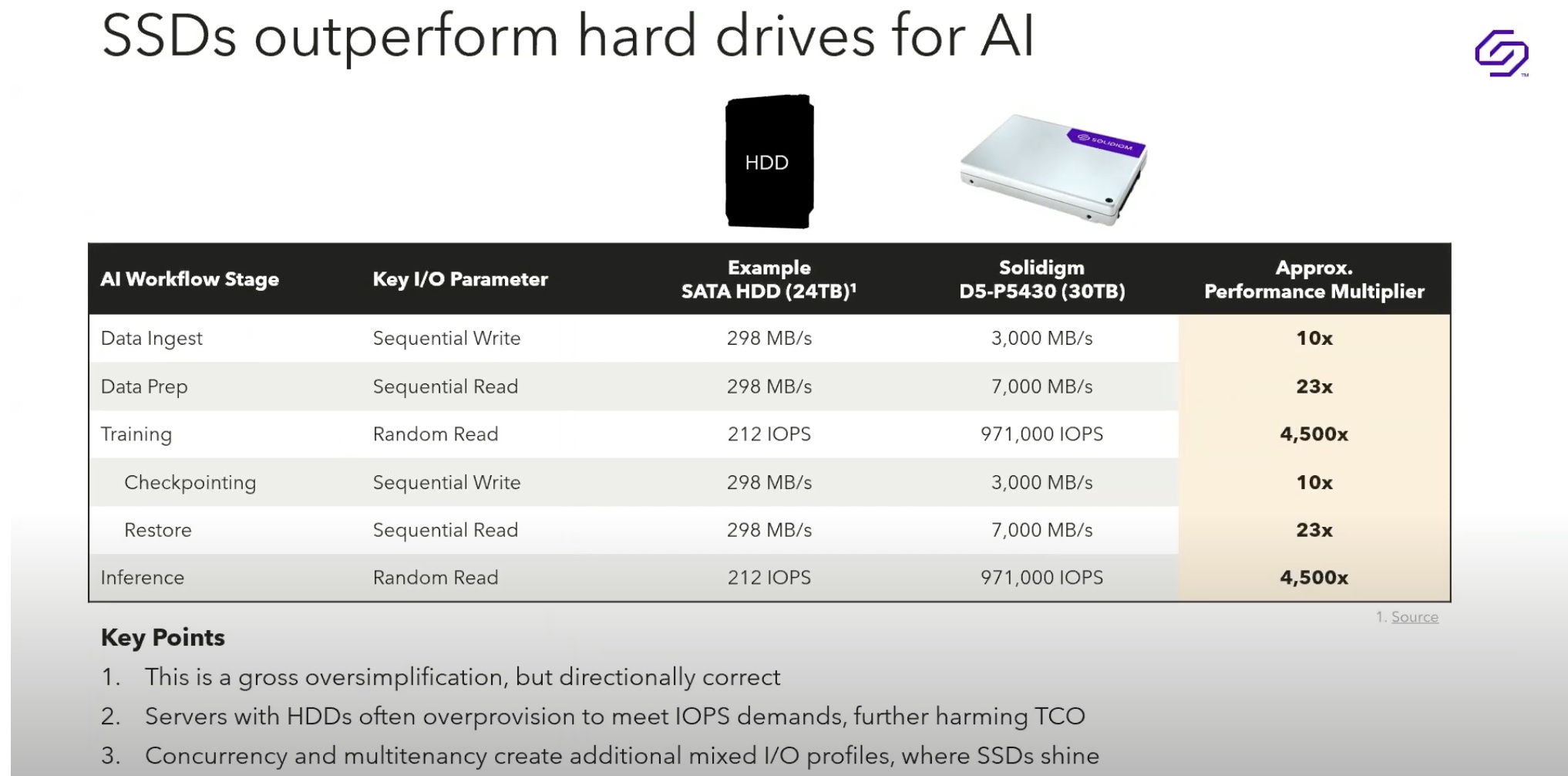

Behind the scenes, AI proceeds in stages, roughly as shown above. Raw data is gathered from various sources and written to drives. It is then cleaned, normalized and tokenized through an ETL process before being presented to the model for training.
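The ETL stage can be sketched in a few lines. This toy version assumes plain-text documents and works in four steps:

- It strips tag-like markup with a regular expression.
- It lowercases text as its normalization step.
- It splits text on word characters.
- It maps each token to an integer ID.

The function names are hypothetical. Production LLM pipelines typically use subword tokenizers such as BPE rather than word splitting.

```python
import re
from collections import Counter


def clean(doc: str) -> str:
    """Remove tag-like markup and collapse runs of whitespace."""
    doc = re.sub(r"<[^>]+>", " ", doc)
    return re.sub(r"\s+", " ", doc).strip()


def normalize(doc: str) -> str:
    """Lowercase so that 'GPUs' and 'gpus' become the same token."""
    return doc.lower()


def tokenize(doc: str) -> list[str]:
    """Naive word-level tokenization (a stand-in for a subword tokenizer)."""
    return re.findall(r"\w+", doc)


def build_vocab(token_lists: list[list[str]], min_count: int = 1) -> dict[str, int]:
    """Assign integer IDs to tokens in sorted order; ID 0 is reserved for unknowns."""
    counts = Counter(tok for toks in token_lists for tok in toks)
    vocab = {"<unk>": 0}
    for tok, n in sorted(counts.items()):
        if n >= min_count:
            vocab[tok] = len(vocab)
    return vocab


def etl(raw_docs: list[str]) -> tuple[list[list[int]], dict[str, int]]:
    """Clean, normalize and tokenize each document, then encode it as token IDs."""
    token_lists = [tokenize(normalize(clean(d))) for d in raw_docs]
    vocab = build_vocab(token_lists)
    ids = [[vocab.get(tok, 0) for tok in toks] for toks in token_lists]
    return ids, vocab


if __name__ == "__main__":
    ids, vocab = etl(["<p>GPUs  need data</p>", "Data feeds GPUs"])
    print(vocab)
    print(ids)
```

Every stage reads the previous stage’s output and writes a new version of the dataset. This is one reason data preparation generates heavy mixed read/write traffic at scale.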

Checkpoints are saved periodically so that, in the event of a failure, the model can be rolled back to a known-good earlier state. Finally, in the inference stage, new inputs are fed into the trained model.
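Periodic checkpointing with resume-after-failure looks roughly like the sketch below. It assumes a small JSON file can hold the training state. Real frameworks serialize model and optimizer tensors (for example with PyTorch’s `torch.save`), which is why checkpoints reach storage as bursts of large writes. Each checkpoint goes to a temporary file that is then renamed over the old one, so a crash mid-write never corrupts the last good copy. `save_checkpoint`, `load_checkpoint` and `train` are hypothetical names.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    """Write state to a temp file in the same directory, then atomically rename it."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())  # make sure the bytes reach the drive before the rename
    os.replace(tmp_path, path)  # atomic on POSIX when both paths share a filesystem


def load_checkpoint(path: str) -> Optional[dict]:
    """Return the last saved state, or None if no checkpoint exists yet."""
    if not os.path.exists(path):
        return None
    with open(path) as f:
        return json.load(f)


def train(path: str, total_steps: int, every: int, fail_at: Optional[int] = None) -> dict:
    """Run (or resume) a dummy training loop, checkpointing every `every` steps."""
    state = load_checkpoint(path) or {"step": 0, "weight": 0.0}
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError(f"simulated failure at step {fail_at}")
        state["weight"] += 0.1  # stand-in for an optimizer update
        state["step"] += 1
        if state["step"] % every == 0:
            save_checkpoint(path, state)
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        ckpt = os.path.join(d, "ckpt.json")
        try:
            train(ckpt, total_steps=10, every=3, fail_at=7)
        except RuntimeError as err:
            print(err, "| resuming from step", load_checkpoint(ckpt)["step"])
        print("finished at step", train(ckpt, total_steps=10, every=3)["step"])
```

The interval is a trade-off. Checkpointing more often loses less work after a failure but writes more data. Checkpointing less often saves write bandwidth at the price of redoing more steps.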

Across these stages, storage demands rise and fall with each workload’s I/O profile. Some stages, such as data ingestion and checkpointing, are write-heavy. Others, such as training and inference, are read-intensive.

Companies need storage that is affordable enough to absorb this tremendous data influx and also fast enough for intense performance demands. Such storage can significantly speed up model development by delivering data to GPUs quickly and keeping their utilization high.

Currently, HDDs account for the bulk of storage hardware in core datacenters. They are big on capacity and inexpensive, but their access times are measured in milliseconds. Their bandwidth and latency cannot support the high I/O demands of the AI workflow. Drives serving training, checkpointing and data preparation in parallel across multiple pipelines must be built for concurrency and multi-tenancy.

To compensate, storage teams overprovision, buying more drives than capacity alone would require just to reach the throughput they need.
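The overprovisioning trap reduces to a simple sizing rule. A team must buy enough drives to meet both the capacity target and the throughput target, and the larger of the two counts wins. The sketch below assumes throughput adds linearly across drives, ignoring controller, network and RAID overheads. `drives_needed` and every value in the example are hypothetical.

```python
import math


def drives_needed(capacity_tb: float, throughput_gb_s: float,
                  drive_capacity_tb: float, drive_throughput_gb_s: float) -> dict:
    """Size a drive pool for both capacity and aggregate throughput.

    Assumes per-drive throughput scales linearly with drive count.
    """
    for_capacity = math.ceil(capacity_tb / drive_capacity_tb)
    for_throughput = math.ceil(throughput_gb_s / drive_throughput_gb_s)
    count = max(for_capacity, for_throughput)
    return {
        "for_capacity": for_capacity,
        "for_throughput": for_throughput,
        "count": count,
        # Capacity bought only because throughput forced a larger drive count.
        "overprovisioned_tb": count * drive_capacity_tb - capacity_tb,
    }


if __name__ == "__main__":
    # Hypothetical: 1,000 TB usable, 100 GB/s aggregate bandwidth,
    # HDDs of 20 TB with roughly 0.25 GB/s of throughput each.
    print(drives_needed(1000, 100, 20, 0.25))
```

Under these placeholder numbers, throughput rather than capacity sets the drive count: 400 drives instead of 50. That leaves 7,000 TB of capacity bought only for its bandwidth, along with the servers, rack space and power to run it.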

High-Density QLC Storage

Solidigm’s goal is to deliver an industry-leading storage portfolio that offers flexible scaling and operational efficiency from core to edge while also making AI storage cheaper. Partnering with companies like Supermicro, CoreWeave, VAST Data and NVIDIA, Solidigm is designing solutions built around that portfolio.

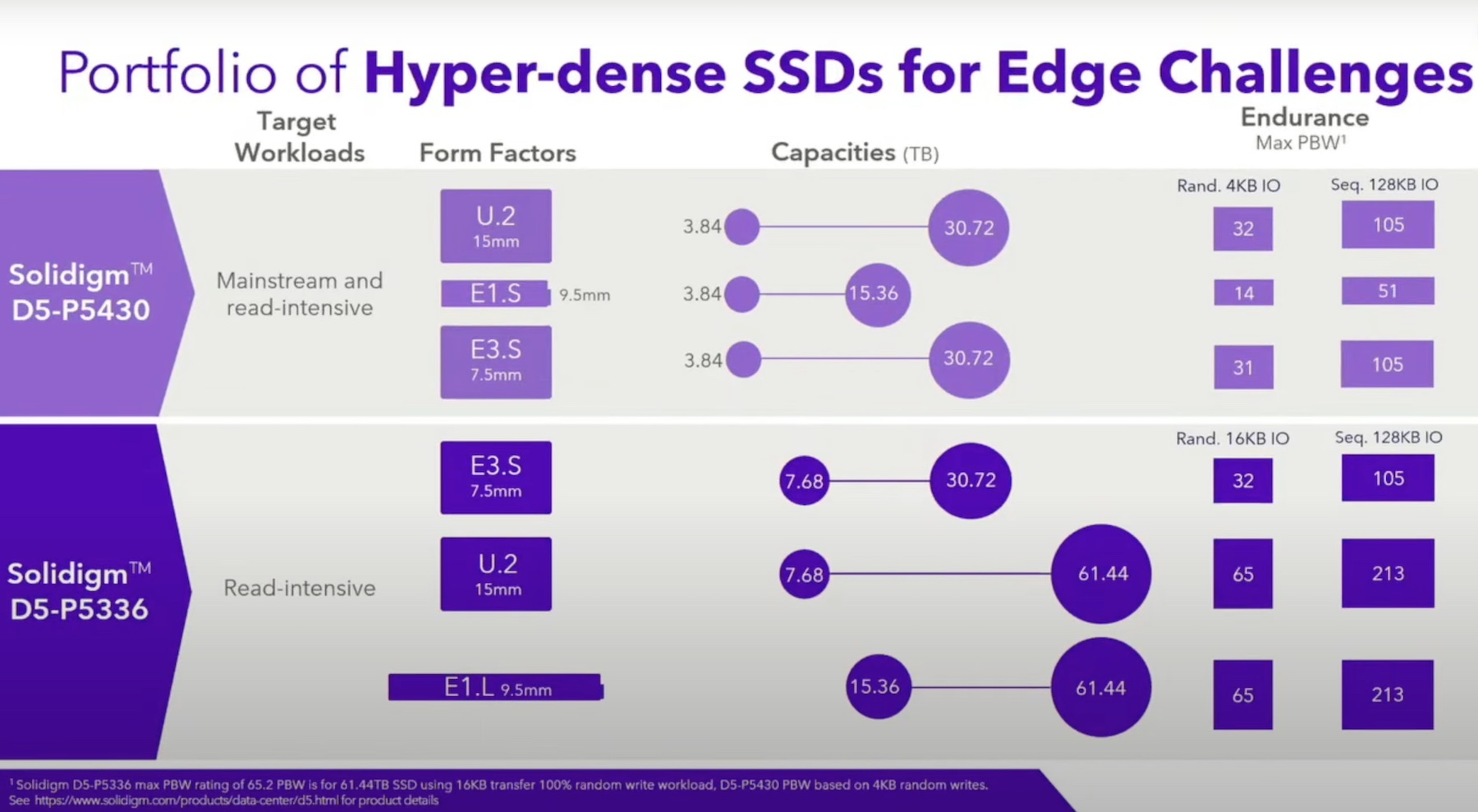

Solidigm’s solutions are built for deep capacity and power efficiency. The D5-P5336 offers 61TB of capacity in a compact 2.5” form factor. Because of this density, datacenters need up to 5.2 times fewer drives, which translates into 9 times fewer servers and a 9 times smaller rack footprint.

The top-tier drives in Solidigm’s portfolio deliver leading performance for massive-scale AI deployments. Solidigm’s data shows that for a 10PB AI data pipeline, D5-P5336 high-density QLC SSDs deliver 4.3 times lower energy costs than an all-HDD array. That translates into a 46% lower TCO over five years.

“You’re saving on your server footprint, your datacenter footprint, and your power envelope as well. All of these things feed into great cost savings over the life of the hardware,” says Stryker.
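Energy cost follows a simple formula: device count × watts per device × hours of operation × electricity price. A facility multiplier (PUE) can be applied on top to account for cooling and power delivery. The sketch below encodes that formula under the assumption that devices draw constant power around the clock. `energy_cost_usd` and the example values are hypothetical and do not reproduce Solidigm’s 4.3x figure.

```python
HOURS_PER_YEAR = 24 * 365


def energy_cost_usd(devices: int, watts_per_device: float, years: float,
                    usd_per_kwh: float, pue: float = 1.0) -> float:
    """Electricity cost of running `devices` continuously for `years`.

    Assumes constant average power per device; `pue` scales IT power up to
    total facility power (cooling, power distribution losses).
    """
    kwh = devices * watts_per_device * HOURS_PER_YEAR * years / 1000
    return kwh * pue * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical fleet: 100 devices at 10 W each, 5 years, $0.15/kWh, PUE 1.4.
    cost = energy_cost_usd(devices=100, watts_per_device=10.0, years=5,
                           usd_per_kwh=0.15, pue=1.4)
    print(f"5-year energy cost: ${cost:,.0f}")
```

The cost is linear in device count, so consolidating onto fewer drives and servers cuts the energy bill roughly in proportion. This holds as long as per-device power does not rise by more than the device count falls.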

Performance is further amplified by the Cloud Storage Acceleration Layer (CSAL), open-source write-shaping software developed by Solidigm. “What it enables us to do is take incoming writes and direct them intelligently to one device or another. It appears to the system as one device, but under the hood, CSAL is essentially directing traffic,” he explains.
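The general idea of write shaping can be illustrated with a toy model. This sketch is not CSAL’s implementation or API. It assumes a hypothetical `WriteShapingVolume` class that presents one logical volume. Small writes are absorbed in a fast tier, standing in for an SLC or other high-endurance SSD. Once enough blocks accumulate, they are appended to a capacity tier, standing in for a QLC SSD, as one large sequential segment. A mapping table tracks where each block lives.

```python
from typing import Optional


class WriteShapingVolume:
    """Toy single logical volume over a fast tier and a log-structured capacity tier."""

    def __init__(self, flush_threshold: int = 4):
        self.flush_threshold = flush_threshold
        self.fast_tier: dict[int, bytes] = {}   # write buffer (fast SSD stand-in)
        self.capacity_log: list[bytes] = []     # append-only log (QLC SSD stand-in)
        self.mapping: dict[int, int] = {}       # logical block -> index in capacity_log
        self.segments: list[int] = []           # size of each sequential append

    def write(self, lba: int, data: bytes) -> None:
        # Repeated writes to a hot block overwrite it here and never reach the capacity tier.
        self.fast_tier[lba] = data
        if len(self.fast_tier) >= self.flush_threshold:
            self._flush()

    def _flush(self) -> None:
        # Turn many small, scattered writes into one large sequential append.
        for lba, data in self.fast_tier.items():
            self.mapping[lba] = len(self.capacity_log)
            self.capacity_log.append(data)  # older copies become garbage a real system reclaims
        self.segments.append(len(self.fast_tier))
        self.fast_tier.clear()

    def read(self, lba: int) -> Optional[bytes]:
        # The newest data is in the fast tier if present, otherwise in the log.
        if lba in self.fast_tier:
            return self.fast_tier[lba]
        idx = self.mapping.get(lba)
        return None if idx is None else self.capacity_log[idx]


if __name__ == "__main__":
    vol = WriteShapingVolume(flush_threshold=3)
    for lba, data in [(7, b"a"), (2, b"b"), (7, b"c"), (5, b"d")]:
        vol.write(lba, data)
    print(vol.segments, vol.read(7), vol.read(5))
```

In this model, the capacity tier only ever sees large appends. That is the kind of write pattern high-density QLC drives generally favor. The caller still reads and writes a single volume, much as Stryker describes.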

To achieve maximum performance and GPU utilization, Solidigm recommends combining Solidigm D7-P5810 SLC SSDs, for high combined read/write performance, with the D5-P5336 for capacity. CSAL or equivalent software then sits on top to help sustain high application write performance.

Be sure to watch Solidigm’s presentations from the recent AI Field Day event for a technical deep-dive, and check out other Gestalt IT coverage of Solidigm for expert insights.