Intel rocked the world earlier this year with the release of its 3rd Generation Xeon Scalable processors, designed specifically to advance the compute capabilities of servers. At its exclusive Tech Field Day event surrounding the new release, Intel gave an overview of how the Xeon platform is changing the AI edge game.

Getting AI to the Edge

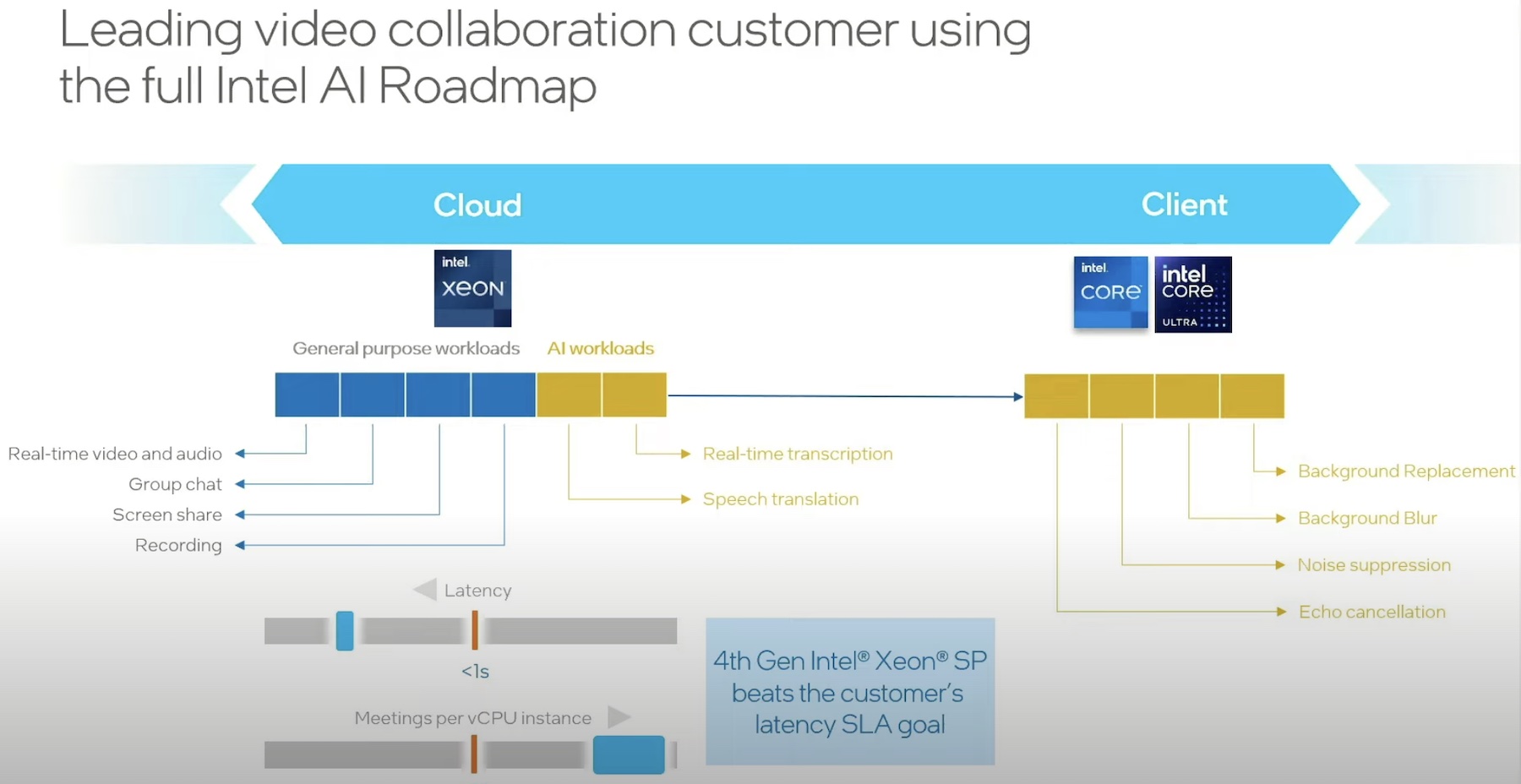

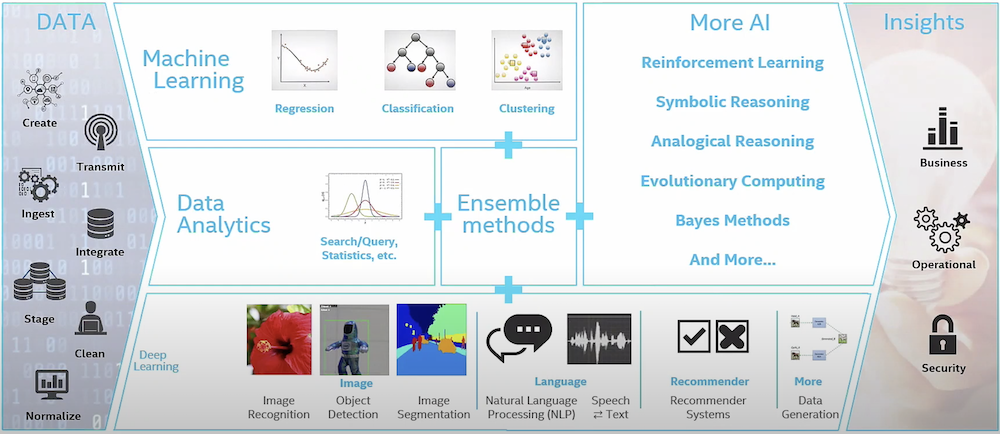

As artificial intelligence capabilities continue to sweep the enterprise IT industry, the challenge many IT practitioners now face is finding ways to extend the power of AI to the places it’s needed most. One such location is where much of today’s data is generated and processed: the edge.

By extending AI to the edge, organizations can cut down on compute latency while also improving process efficiency where it matters the most. In turn, employees become more efficient as well, because the data they need to do their jobs can be processed and analyzed right where they’re working.

Traditionally, however, the infrastructure required for AI operations has been too large in scale to be feasible at the edge, where employees may often be working from laptops or even mobile devices. Add to that the general decentralization of IT resources we’ve seen over the past year, and it’s understandable that AI at the edge has long been considered impractical. Intel’s new generation of Xeon Scalable processors seeks to change that.

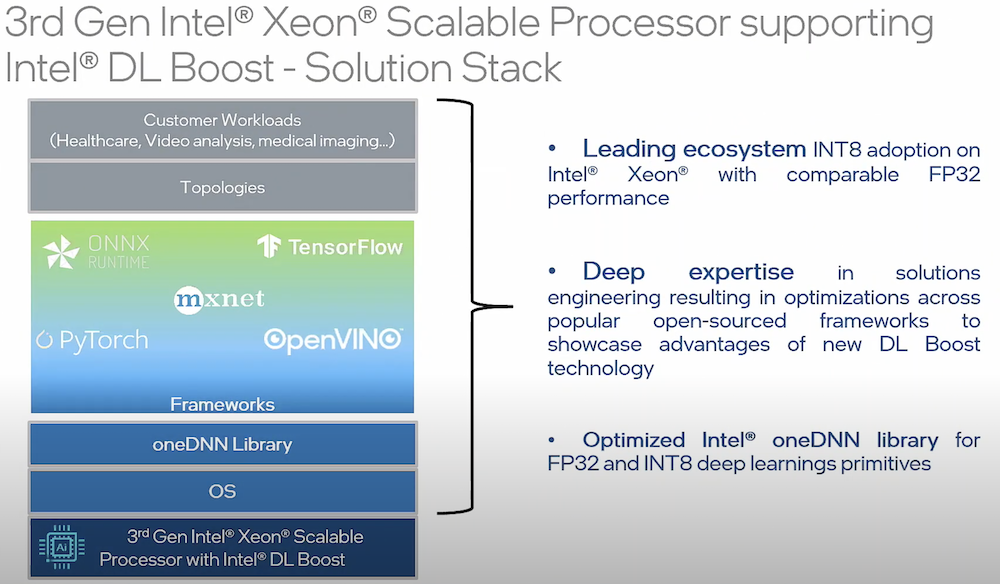

Intel’s 3rd Gen Xeon Scalable Platform and DL Boost

Intel’s announcement of the third generation of Xeon earlier this year set the industry alight. Code-named “Ice Lake,” the new scalable server architecture targets established environments like data centers as well as emerging industry needs like 5G networks.

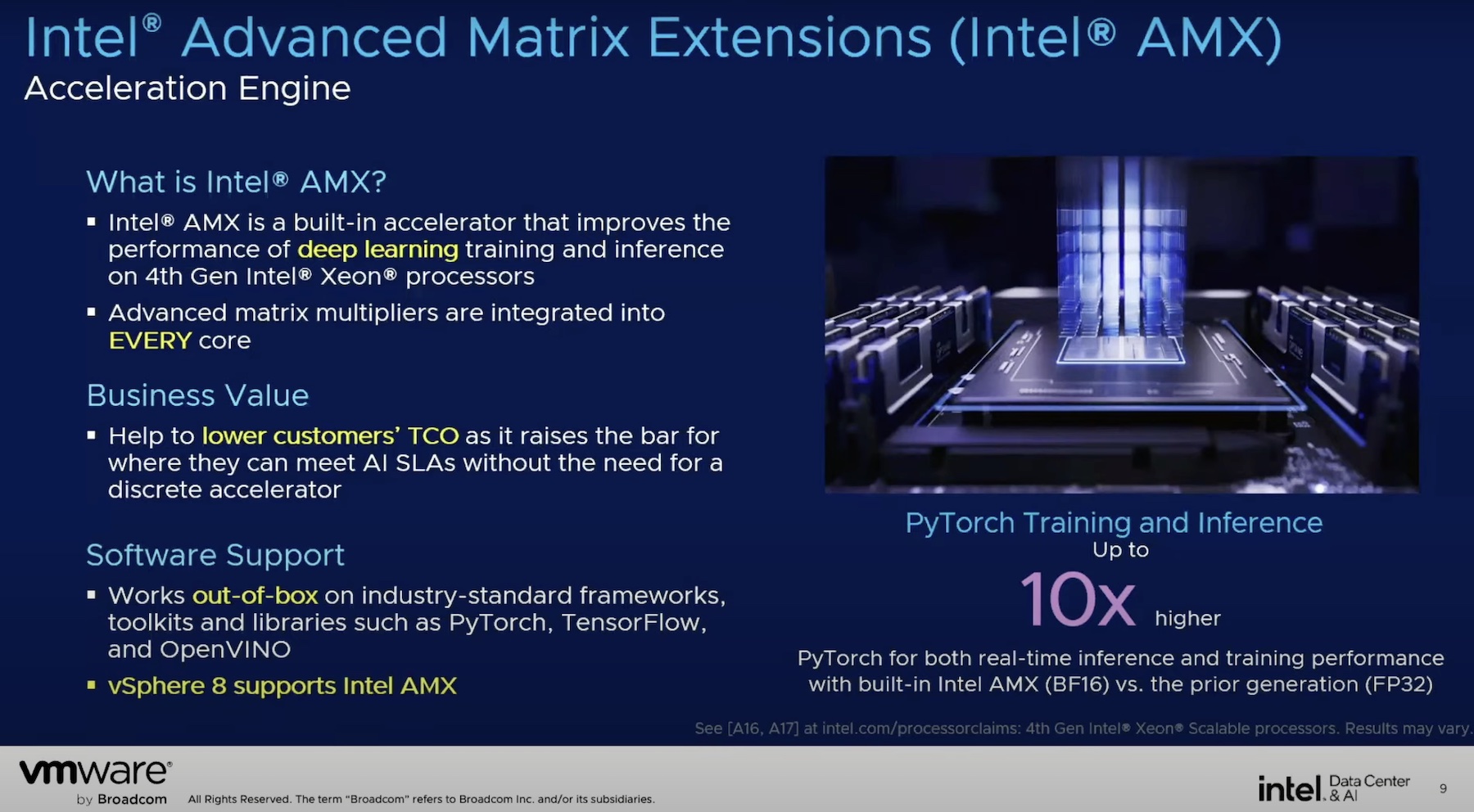

Another key application of Ice Lake is AI compute, specifically through Intel’s Deep Learning (DL) Boost technology. DL Boost operates on a principle of low precision: it runs AI math on lower-precision number formats, such as 8-bit integers, instead of full 32-bit floating point. At first, that sounds counterintuitive to the needs of most modern AI operations. In practice, low-precision arithmetic requires less silicon area and power, resulting in better cache usage, a reduction in memory bottlenecks, and an overall improvement in operations per second compared to higher-precision arithmetic.
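To make the low-precision idea concrete, here is a minimal Python sketch of symmetric 8-bit quantization applied to a dot product. It is only an illustration of the general technique, not Intel’s implementation: DL Boost performs this kind of integer multiply-accumulate in hardware, and the function names and sample values below are hypothetical.

```python
def quantize_int8(values):
    """Map floats onto integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def int8_dot(qa, scale_a, qb, scale_b):
    """Multiply-accumulate in integers, then rescale the result back to float."""
    acc = sum(x * y for x, y in zip(qa, qb))  # integer-only accumulation
    return acc * scale_a * scale_b


def float_dot(a, b):
    """Reference dot product in full floating point."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical sample data standing in for model weights and activations.
weights = [0.12, -0.5, 0.33, 0.9]
activations = [1.0, 0.25, -0.75, 0.5]

q_weights, w_scale = quantize_int8(weights)
q_activations, a_scale = quantize_int8(activations)

exact = float_dot(weights, activations)
approx = int8_dot(q_weights, w_scale, q_activations, a_scale)
error = abs(exact - approx)
```

Each quantized value fits in a single byte rather than four, which is where the cache and memory-bandwidth savings come from, while `error` stays small relative to the result for well-scaled data like this.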

For edge use cases, DL Boost ensures that, even with a relatively modest maximum of 40 cores per processor, Ice Lake can still keep up with the majority of AI-dependent workloads.

Ice Lake and DL Boost in Action

At its exclusive Tech Field Day event surrounding the Ice Lake release, Intel detailed exactly what DL Boost on Ice Lake is capable of. During the presentation, Rajesh Poornachandran, Principal Engineer in Intel’s Data Platforms Group (DPG), and Shamima Najnin, Software Engineering Manager, DPEA, DPG, each discussed what Ice Lake and DL Boost have to offer.

In her presentation, Shamima Najnin dove into a use case of Ice Lake and DL Boost featuring Intel customer Tencent Gaming. She described how Tencent was able to leverage the new generation of Xeon Scalable processors to optimize its PRN model-based 3D facial generation and reconstruction solution. With Ice Lake, DL Boost, and Intel’s Low Precision Optimization Tool, Tencent saw a more than 50% increase in inference speed and a 4.23x improvement in overall performance compared to the last generation of Xeon.

Zach’s Reaction

I can only speak for myself, but based on the buzz I’m seeing across the rest of the industry, Ice Lake is changing the game for edge-based AI computing, and I am excited for all of the new advancements that companies will achieve by using it.

To learn more about how Intel Ice Lake is disrupting the scalable processor market, check out their other Tech Field Day presentations regarding the new generation of Xeon. You can also find more exclusive coverage and reactions from the release on GestaltIT.com, such as this piece about why the platform is an AI workhorse.