From the edge through the network to the cloud, an explosion of data drives the need for flexibility and agility in the products that process, store, and move data. Intel’s launch of its 3rd Gen Xeon Scalable processors, code-named “Ice Lake”, together with announcements of additional hardware and software solutions, targets this data-centric problem.

Process Everything

Intel claims to have the most broadly deployed data center processor and has some impressive statistics to support this claim. Over 50 million Intel Xeon Scalable processors have been shipped and deployed in data center environments ranging from the cloud to the intelligent edge, and more than one billion cores have been deployed across more than 800 cloud providers since 2013[1].

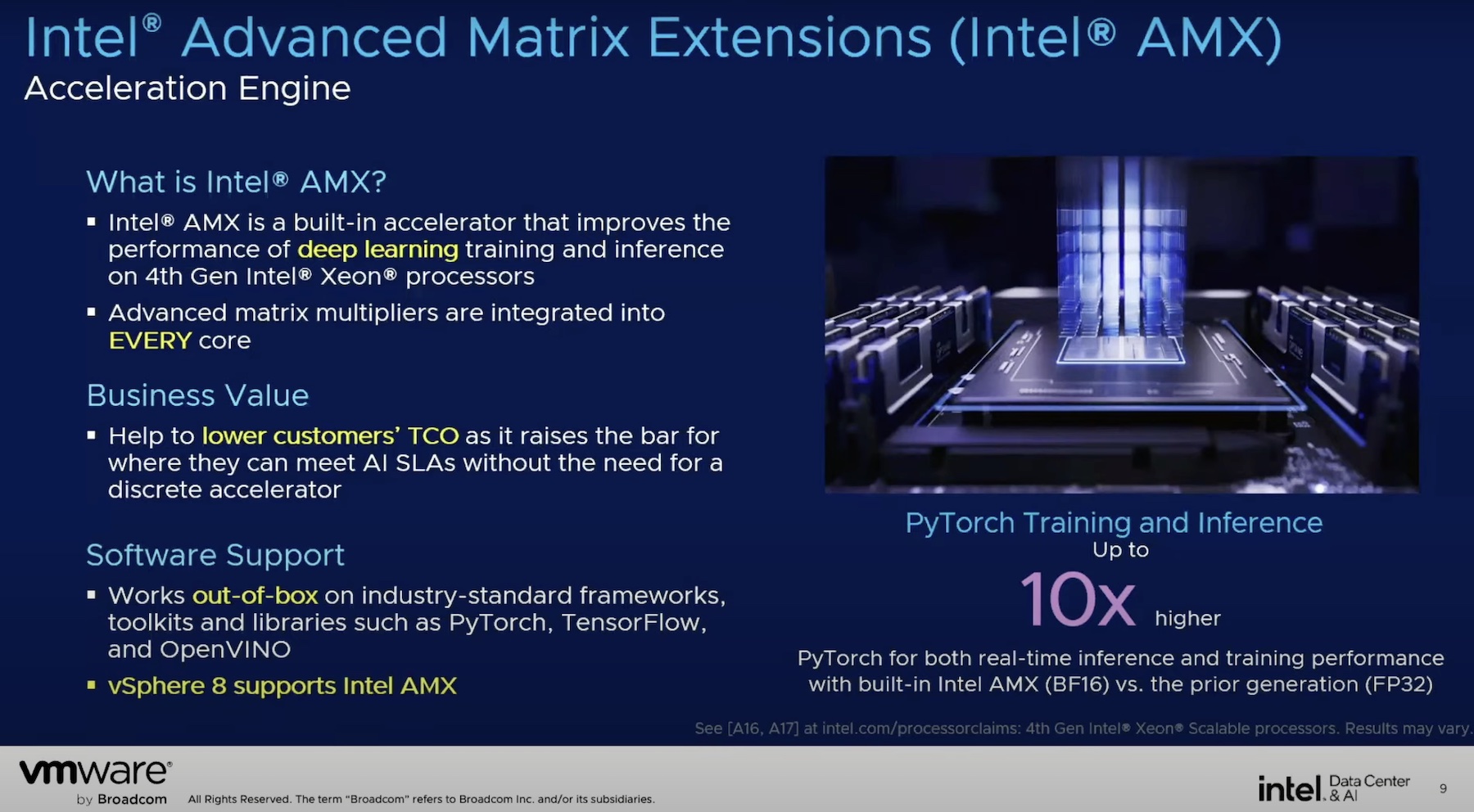

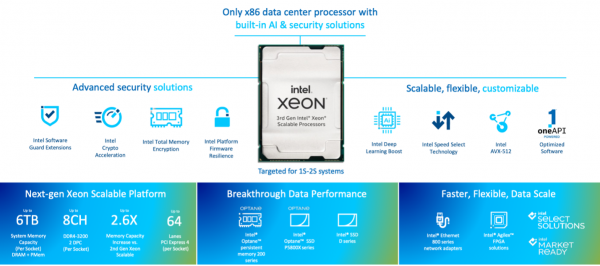

One reason for this wide adoption is that Intel Xeon Scalable processors are built around customer needs, and the 3rd Gen processor continues that approach, whether a customer runs workloads in the cloud, the enterprise, or at the edge, needs high-performance computing (HPC), or focuses on 5G. Intel also describes it as the only data center processor with built-in Artificial Intelligence (AI) acceleration, through Intel Deep Learning (DL) Boost technology. This matters to developers who have had to refactor code when forced to migrate AI workloads from a CPU to a GPU for performance reasons: with acceleration built into the CPU, more of those workloads can stay where they already run.

A widespread trend in data center security is to encrypt everything, protecting data at rest, in flight, and in use. Built-in crypto acceleration reduces the performance impact of this pervasive encryption. Confidential computing is provided through Intel Software Guard Extensions (Intel SGX), which Intel says offers the smallest potential attack surface of any Trusted Execution Environment (TEE) available for the data center.

Store More

Memory and storage make up a significant portion of a data center’s overall total cost of ownership (TCO). Data-intensive workloads perform best when hot data is held in memory, but DRAM is expensive and limited in capacity. Intel Optane persistent memory (PMem) 200 Series offers affordable memory capacity, with data persistence, for many applications. It acts as fast storage on the memory bus, avoiding the latency of the input/output (I/O) bus. Data on the module is always protected with application-transparent AES 256-bit hardware encryption, which enhances security without requiring code changes or sacrificing performance.

As workloads in Hyper-Converged Infrastructure (HCI), AI, HPC, and databases grow more intensive, they place greater demands on storage, and NAND SSDs can become a performance bottleneck. Rather than replacing NAND SSDs, Intel’s Optane SSD P5800X storage devices are used to complement them. There are three primary storage acceleration use cases in which NAND SSDs back Optane SSDs:

- Placing performance-sensitive data on Optane.

- Using Optane as a write cache.

- Data tiering, where recently used (“hot”) data is kept on Optane while older (“warm” or “cold”) data is kept on NAND.
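The tiering use case above can be illustrated with a small model. This is a minimal, hypothetical sketch that assumes a simple least-recently-used (LRU) placement policy: the class name `TieredStore`, the tier labels, and the `fast_capacity` parameter are inventions for this example, and plain Python dictionaries stand in for the Optane and NAND devices. It is not how any Intel caching or tiering software actually decides data placement.

```python
from collections import OrderedDict


class TieredStore:
    """Hypothetical two-tier store: a small fast tier for hot data
    (standing in for Optane) and a large capacity tier for warm/cold
    data (standing in for NAND). Placement uses a simple LRU policy."""

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity
        # Ordered from least to most recently used.
        self.fast = OrderedDict()
        self.capacity = {}

    def put(self, key, value):
        # New or updated data is treated as hot and written to the fast tier.
        self.capacity.pop(key, None)
        self.fast[key] = value
        self.fast.move_to_end(key)
        self._demote()

    def get(self, key):
        if key in self.fast:
            self.fast.move_to_end(key)  # refresh recency on a fast-tier hit
            return self.fast[key]
        # Fast-tier miss: promote the entry from the capacity tier.
        # Raises KeyError if the key is in neither tier.
        value = self.capacity.pop(key)
        self.fast[key] = value
        self._demote()
        return value

    def _demote(self):
        # Move least recently used entries down until the fast tier fits.
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)
            self.capacity[old_key] = old_value

    def tier_of(self, key):
        if key in self.fast:
            return "fast"
        if key in self.capacity:
            return "capacity"
        return None


store = TieredStore(fast_capacity=2)
store.put("a", 1)
store.put("b", 2)
store.put("c", 3)          # "a" is now the coldest entry and is demoted
print(store.tier_of("a"))  # capacity
print(store.get("a"))      # 1, and "a" is promoted back to the fast tier
print(store.tier_of("b"))  # capacity, since "b" became the coldest entry
```

LRU is only one possible policy; real tiering software may also weigh access frequency, I/O size, or read/write mix when choosing what stays on the fast tier.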

Move Faster

Intel’s Ethernet 800 Series network adapters offer up to 100Gbps per port and 200Gbps in a single PCIe slot for bandwidth-intensive workloads such as those found in communications networking, cloud networking, Content Delivery Networks (CDN), high-bandwidth storage targets, and HPC/AI fabrics.

The Application Device Queues (ADQ) feature prioritizes application traffic to help deliver the performance required for high-priority, network-intensive workloads.

Enhanced Dynamic Device Personalization (DDP) uses the adapter’s fully programmable packet pipeline to classify frames of advanced and proprietary protocols on the adapter itself, increasing throughput, lowering latency, and reducing host CPU overhead.

Come for the Components, Stay for the Platform

Requirements for a typical data center are low latency, high throughput, consolidation of workloads, performance consistency, elasticity, and efficiency. Data centers need to perform at scale while maintaining an acceptable TCO. To support these requirements, systems need to be architected with scale and balance.

From the processor to the memory, SSDs, FPGAs, and Ethernet adapters, Intel has increased scale and performance across all technology pillars. The balance comes from increasing the performance of the connective tissue between these pillars: memory bandwidth has increased, and so has I/O bandwidth, with the adoption of PCIe Gen4.

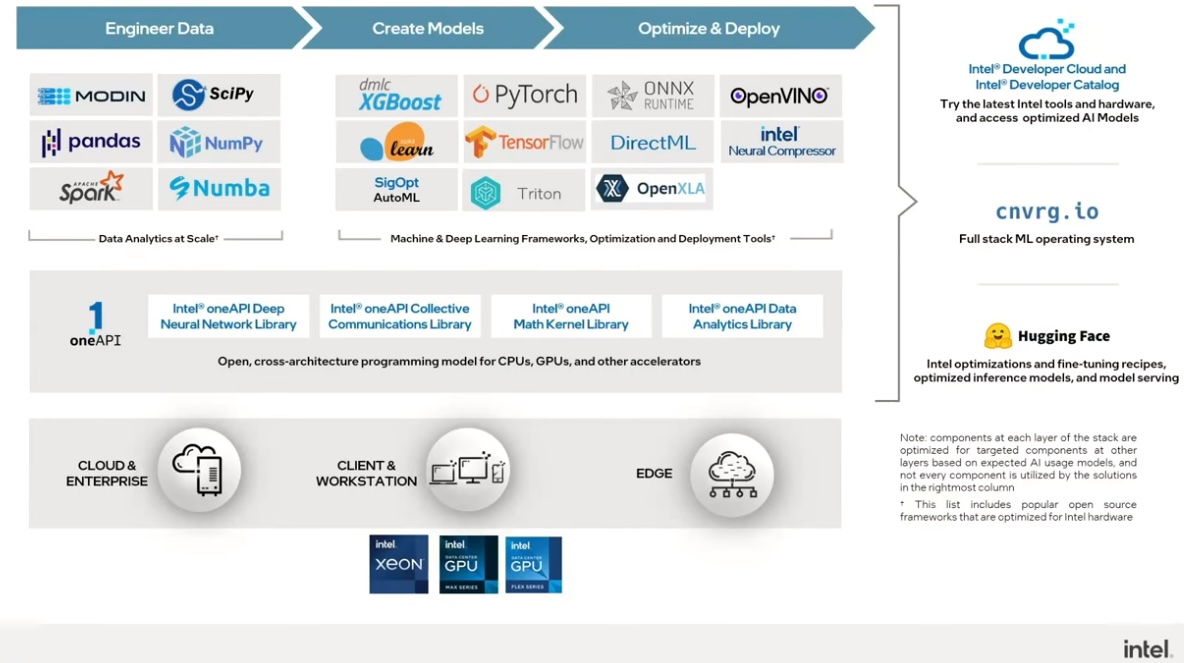

Hardware is great, but software is needed to get the best performance out of the 3rd Gen Intel Xeon Scalable processor’s architecture and capabilities. Software lets customers build considerable flexibility into the entire platform. Intel’s oneAPI lets developers optimize code to run a particular workload on the processor. Storage acceleration can be customized, and the pipeline on the network adapter is fully programmable. The options for customization are almost endless.

Intel has decades of experience delivering successful solutions, and the 3rd Gen Intel Xeon Scalable processor continues that track record, allowing customers to solve for anything.

Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries.

[1] Source: Intel internal estimate using IDC Public Cloud Services Tracker and Intel’s Internal Cloud Tracker, as of March 1, 2021