Apple’s third-generation iPad Pro is here, and it’s a killer. Not only does it advance the iPad formula in an unprecedented way, but it also proves that Apple’s in-house chip design team can beat Intel and AMD at their own game. This should terrify them, especially if Dell and other system vendors read the tea leaves the way I do. We’re in for some major changes in the computer chip world!

A Decade of Chipmaking Progress

Apple helped found ARM as a company way back in 1990, but it was their decision to use the architecture as the basis for the iPhone in 2007 that cemented it as an industry leader. Nearly every mobile device now uses ARM cores thanks to their solid performance and thrifty use of power. But mobile ARM cores have always underwhelmed in terms of absolute performance (especially floating point) and I/O. Although there have been many attempts to bring ARM to laptops, desktops, and servers, the architecture has seen limited success outside portable devices.

But portable devices are Apple’s focus now, and the company has been enhancing the performance of their iPhone and iPad for a decade. After investing in in-house ARM development resources starting with the 2008 acquisition of P.A. Semi, Apple released their first “in-house” ARM chip, 2010’s A4. The company is said to have been dissatisfied with the progress and focus of third-party ARM designs and wanted to enhance their products with custom chips.

And enhance they did! The A4 wasn’t very impressive, but each succeeding generation has leaped forward in terms of performance and features. The dual-core A5 was followed by the A6 with custom-designed “Swift” cores for the iPhone 5 and fourth-generation iPad. Then came the A7 with its “secure enclave” for Touch ID and the triple-core A8X for the iPad Air 2. The A9 featured the M9 motion co-processor as well as the advent of an Apple-designed NVMe storage interface. The A10 Fusion adopted a “big/little” combination of 4 cores (in the iPhone 7) and 6 cores (in the iPad Pro) and saw Apple beginning to switch from PowerVR-based graphics to an in-house GPU. The A11 Bionic was the first to allow all these cores to be active at once, and also added dedicated neural network processors to the mix. This brings us to the current A12 Bionic, which features 6 or 8 custom CPU cores, 4 or 7 custom graphics cores, and a next-generation “neural engine”.

As you can see, each revision of the Apple A-series CPUs moved the design further from third-party IP and added serious performance features. And the performance of these chips has rocketed forward, from the A7 challenging low-end Intel mobile chips to the astounding performance of today’s A12X, which bests most high-end desktop processors.

Checking the CPU Numbers

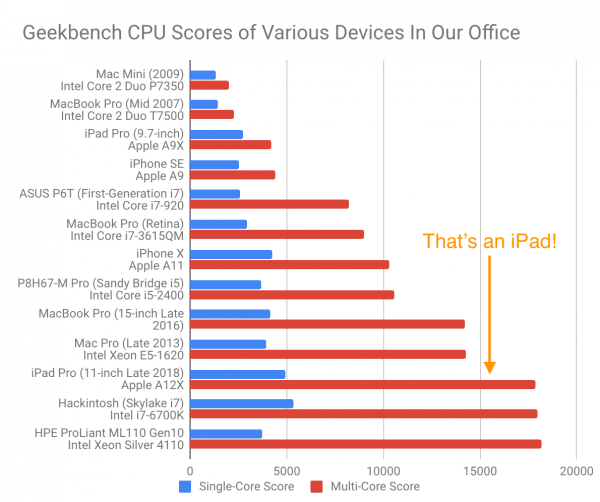

Comparing my new 2018 iPad Pro to other devices I use regularly is really eye-opening. It ranks near the top in both single- and multi-core CPU performance even when compared to serious desktop and server hardware, like my high-end Skylake i7-6700K desktop and 8-core Xeon server! And those are hot, power-sucking chips (drawing 85+ Watts). This small battery-powered device is one of the fastest devices I own.

I tested various devices in our office, and the iPad Pro is one of the fastest in both single-core and multi-core CPU performance!

And Apple’s progress is really astounding. When the first-generation iPad Pro came out in late 2015, I was amazed to see that the A9X was so much faster than the Intel Core 2 Duo chips in my old Mac Mini and MacBook Pro. When I got the iPhone X, I was shocked to see that the A11 outperformed the Intel Core i7 in my old Retina MacBook Pro and boasted single-thread performance faster than my new Touch Bar MacBook Pro. The new 2018 iPad Pro A12X soundly beats them both.

I know that Geekbench isn’t the end-all, be-all of performance tests. And some of this hardware is a little old. But it’s clear that Apple has designed a monster CPU that offers performance in the same class as Intel’s latest Core processors. Plus, Apple and TSMC beat Intel in manufacturing: The A12 is the first 7 nm class CPU in consumer hands.

Performance Beyond the CPU

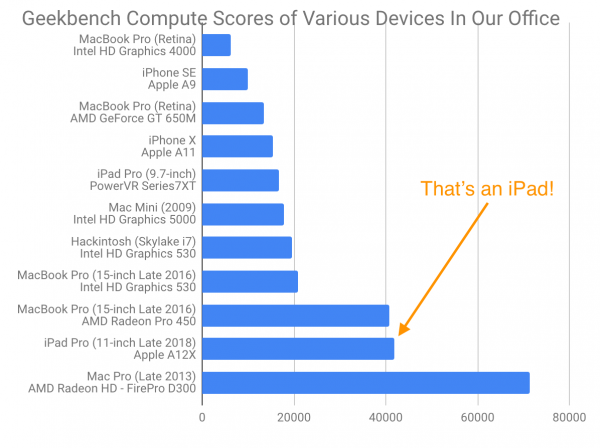

And it’s not just regular integer performance that has improved. Apple has made amazing progress on the GPU side, passing Intel’s integrated GPUs, and has added “neural network” processing, encryption, and impressive storage capabilities. With Moore’s Law coming to an end, and CPU core count escalating, it is features like these that will differentiate future processors.

Let’s start with the GPU. As noted, Apple had been using off-the-shelf GPU designs from ARM and PowerVR until they struck out on their own in 2017. After watching Intel struggle with in-house GPU designs for two decades, I suspected that Apple would simply de-emphasize this element of the chip in favor of alternative offload hardware. After all, they had already delivered the M-series motion co-processors and T-series security chips.

But a funny thing happened that year: Apple did both! They delivered a “neural network” processor and solid GPU performance in the A11! The iPhone X was comfortably fast, even with its new high-resolution screen, and had new image-processing tricks like Face ID.

Apple’s latest GPU trounces Intel’s iGPU and even reaches the level of discrete offerings from AMD

Then came the 2018 iPad Pro. Apple leapfrogged the mainstream integrated GPUs offered by Intel and started offering similar performance to mainstream discrete GPU products from AMD. No, we’re not talking about Vega or NVIDIA Tesla levels of performance. But those are hot and expensive add-ons, not integrated on-chip with a CPU.

Then there’s the A12X Neural Engine. Although we don’t have a good way to compare it to other products, Apple claims that it is 8x faster than last year’s debut model in the A11. Although Intel is active in AI and ML, they’ve never delivered a compelling product even in the professional space. NVIDIA rules that roost with Tesla, but this isn’t a feature you can “get” in a mobile device made by anyone but Apple.

Apple was also the first to deliver serious storage performance in an ARM device, adding NVMe to the A9 way back in 2015 and integrating their own NAND flash controller. Then they created the T2 for the 2017 iMac Pro. This was the first ARM chip with dedicated encryption and RAID-like SSD controller functions, and it’s now used in the MacBook Pro and Air, too. T2-equipped machines are near the front in SSD performance when compared to alternatives from Marvell, Samsung, and so on. And the T2 also acts as a video encoding offload engine in its spare time. Although the iPad Pro doesn’t have a T2, it does have similar hardware offload built in.

What Will Apple Do?

Intel’s failure to deliver much GPU performance, or even to advance the performance of existing iGPUs, must be a continuing source of irritation for Apple. And the same must be true of the frequent delays and supply constraints on their mobile CPUs. It must be galling for them to be advancing the state of the art in phones and tablets so quickly and yet watching their laptop and desktop systems languish.

In the early 1990s, Apple watched Motorola fall behind in developing a successor to the 68000 family then used in the Macintosh. Their response was to form the “AIM” alliance with IBM and Motorola to push for an all-new CPU architecture, PowerPC. The result of this investment was a compelling advance to the platform, Power Macintosh.

But AIM in general, and Apple in particular, didn’t have the resources to stay competitive with Intel. Rather than continue to offer lackluster products, Apple jumped ship again, announcing a switch to Intel CPUs in 2005. This new partnership benefited both companies, with Apple offering high-end machines even as the rest of the PC industry chased the netbook trend.

Now Apple faces a similar decision point. Their Macintosh line is held back by Intel’s CPU offerings, with delays and supply constraints compounding the lack of innovation in silicon. Machines like the MacBook Pro are compromised by Intel’s decisions (e.g. the debacle of the 16 GB RAM limit), delayed by lack of supply, and forced by poor iGPU performance to add discrete GPUs from AMD.

At the same time, Apple is leaping forward in CPU and GPU performance and adding compelling new features like the Neural Engine and T2 co-processor. And they just delivered a home-grown iPad Pro that whips their Intel/AMD MacBook Pro in just about every way. The only thing the iPad Pro lacks is macOS!

Change is Coming

The writing is on the wall. Given the success of their hardware design, development, and production in iPhone and iPad, it’s inevitable that Apple will transition the Macintosh to custom ARM chips. When they do, Apple’s products will leapfrog the PC industry, thanks not just to raw CPU performance but also to compelling co-processors for graphics, ML, storage, and multimedia.

What will Apple’s transition mean for the rest of the industry? Intel’s ecosystem of system vendors is champing at the bit to advance the state of the art and will surely see Apple’s defection as a bellwether. But these vendors don’t have in-house chip design talent or Apple’s financial resources and iOS install base.

Vendors have been defecting to AMD in the x86 desktop and server market, but outside mobile the ARM ecosystem is in shambles. The Avago/Qualcomm/NXP/Marvell dance is the result of a decade of failure to push ARM anywhere but mobile and IoT. Although products like X-Gene and Centriq looked promising, uptake was slim because the fragmented ecosystem wasn’t ready to absorb them.

Apple’s vertical integration is the ultimate lesson that other companies must learn. Now that IBM owns Red Hat, they could re-enter the market for integrated server hardware. HPE, Lenovo, and Dell might have trouble pushing integrated compute solutions, but a large portion of their revenue already comes from non-compute storage and networking hardware that could move to ARM. And Dell’s footprint in client computing and servers means that they could follow Apple with just a few acquisitions.

But I don’t see anyone following Apple in mobile and client computing. The tug-of-war between Google and the dominant Android vendors will continue to derail any serious challenges in mobile, and the same is true of Microsoft and client device vendors. Although Google and Microsoft produce excellent hardware, their sales volume isn’t anywhere close to enough to justify Apple-level hardware development resources.

Stephen’s Stance

I’ve long been skeptical of an ARM transition for Apple Macintosh, but the 2018 iPad Pro has made me a believer. Apple will switch to in-house hardware and this new generation of “ARMacintosh” computers will blow away the rest of the client computing market. And the only way a company could challenge Apple’s escalating dominance in mobile and tablets would be a radical new device.

Oh, and here’s my light-hearted unboxing and first impressions of the 2018 iPad Pro!

My first time commenting.. but…

About the dumbest bit of “journalism” I have seen. Either the creator is ignorant of how technology and benchmarking work, or failed at research and critical thinking… or maybe is being intentionally obtuse.

First of all, it’s a single benchmark suite, and a very flawed benchmark suite at that. Geekbench doesn’t take advantage of any of the extensive extensions on offer with X86-64 that make X86-64 incredibly difficult to replace. Good luck doing AVX512 on ARM, and before you go crying that nothing uses that, technically you are wrong: anything that uses AVX can and will get a boost from AVX512 and AVX256 and AVX2. This includes rendering, encoding in real time, raytracing 3D models, 3D modeling suites, CPU-accelerated CAD/SolidWorks models, etc. Yet it does take advantage of the extensions ARM offers, which is pretty unfair. It’s a biased test that pretends to compare unrelated platforms like ARM and X86-64, and yet it does about the worst job at it.

Next, you said that the GPU matches Radeon discrete graphics… okay, sorta, apart from the fact that the RX450 is slower than Vega 8 integrated graphics (which can be found in APUs in laptops like the Ryzen 2500U and desktops like the Ryzen 2200G) by fairly large margins in reality. I believe the RX450 is basically an underclocked R7-270 from forever ago. And the D300 GPUs are very old and unoptimized, basically discontinued years ago before Apple even used them! Compare it to the Vega 20 or Vega 64 in Macs (without thermal limitations) and you will find it’s walked all over on the compute side of Geekbench, because they easily get over 130-160K points in Geekbench compute… hell, my massively thermally limited 2700U with Vega 10 (10 CUs) and appallingly bad drivers on Windows manages 38,600 points easily, and a desktop 2400G (Vega 11) with 3200MHz DDR4 and a 1600MHz iGPU clock (easily achieved with enough Vcore and cooling) manages just over 50K points in compute… stock, it manages ~45K points. That is integrated graphics beating the RX450 dGPU.

Thank you for commenting. I appreciate hearing your perspective on this.

man, the article is so wrong in so many places. both x86_64 and arm have their own pros and cons and totally different fields of expertise. You are just comparing how fast a deer (x86) runs underwater (arm environment).

i guess you were after all

Now Apple has left Intel, and the M1 MacBooks are the benchmark for ultrabook AND they’re a bargain. Dang.