Intel’s OpenVINO Toolkit enables domain experts, not just data scientists, to deploy high-performance deep learning at the edge.

While artificial intelligence (AI) is a big, broad topic, many of the most common AI solutions are built on some form of machine learning (ML). One of ML’s most popular forms is deep learning (DL), which relies on deep neural networks (DNNs).

For readers familiar with the field, AI, ML, and DL conjure up images of massive GPU farms in giant, power-hungry datacenters. They also bring to mind highly specialized data scientists using advanced mathematics to make sense of enormous data sets.

Those images are not wrong, but they are incomplete.

AI at the Edge

It turns out that ML is a two-sided coin. Those visions of data scientists shoveling big data into hot GPUs represent building and training models, which is one side of the coin. Once a model is trained, however, it has to be put to use: it must infer a correct result from new data it never saw during training. Call this the inference side of the coin.

This is where it gets interesting because, for many deep learning applications, the use case is out at the edge, not inside that perfectly provisioned datacenter (aka ‘the cloud’).

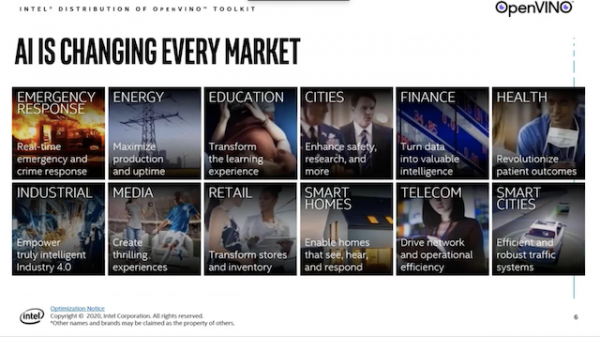

In this case, when I say ‘the edge’, I mean the places where we all work and play. For example, video inferencing (computer vision) can detect defects in factories, find anomalies in medical images at hospitals, and even stop theft at retail stores. Voice recognition and recommendation engines are other use cases that are best served at the edge. Believe me when I say that Siri is just the tip of the iceberg in this field.

AI for All

The astute reader has already spotted the first challenge. We can’t afford to put a big datacenter full of hot GPUs into every location where we might ever want to leverage an AI solution. We can’t afford (in money or time) to ship every bit of data back to a central brain for processing either. That means we need high-performance deep learning inference at the edge – and we need it to function on essentially any hardware available at that location.

There’s also a second challenge hiding here. There are many more potential AI applications and solutions in the world than there are data scientists. Ideally, we could enable domain experts to repurpose an existing model for their specific use case without waiting in line for a data scientist to do it for them.

To enable AI for all, we need a tool that lets domain experts build applications and run inference with DL models on right-sized hardware at the edge.

Enter OpenVINO

Lucky for us, Intel developed the OpenVINO (Open Visual Inference and Neural network Optimization) toolkit.

At the highest level, OpenVINO is free-to-use software that enables high-performance deep learning inference on Intel hardware.

More formally: It “is a comprehensive toolkit for quickly developing applications and solutions that solve a variety of tasks, including emulation of human vision, automatic speech recognition, natural language processing, recommendation systems, and many others. Based on the latest generations of artificial neural networks, including Convolutional Neural Networks (CNNs), recurrent and attention-based networks, the toolkit extends computer vision and non-vision workloads across Intel® hardware, maximizing performance. It accelerates applications with high-performance, AI, and deep learning inference deployed from edge to cloud.”

OK, but what does that mean? Essentially, it solves some of the stickiest AI challenges by doing two things:

- It makes it easier for developers to add artificial intelligence to their applications

- It ensures those applications can execute at the highest levels of performance across any Intel hardware architecture

While that is especially useful at the edge, it is also helpful in the cloud and other big datacenters, where you are likely to find plenty of Intel hardware ready for even bigger inference jobs.

Well, what are you waiting for? Go download and check out OpenVINO today: https://software.intel.com/content/www/us/en/develop/tools/openvino-toolkit/download.html