I recently had the privilege of attending the Gestalt IT Showcase, which focused on the Intel ecosystem, in connection with an Intel-sponsored white paper titled “Digital infrastructure at datacenter scale”. Before reading this article, I strongly encourage you to watch the excellent presentations by Ather Beg, Alastair Cooke, and Craig Rodgers.

Among the great presentations, Craig’s struck more than a chord. I have been following several technologies (notably memory and storage) for years, and Compute Express Link (CXL) has been on my radar since 2019, particularly because of the now-defunct Gen-Z Consortium and its specifications. More on Gen-Z later, but the important point is that Gen-Z was effectively “merged” into CXL last year.

CXL refers both to the CXL Consortium, whose members include a very broad set of industry players (particularly hardware and memory manufacturers), and to the CXL Specification. The CXL Specification defines the standard and was originally developed by Intel. In this article, any further mention of CXL refers to the specification.

Understanding CXL

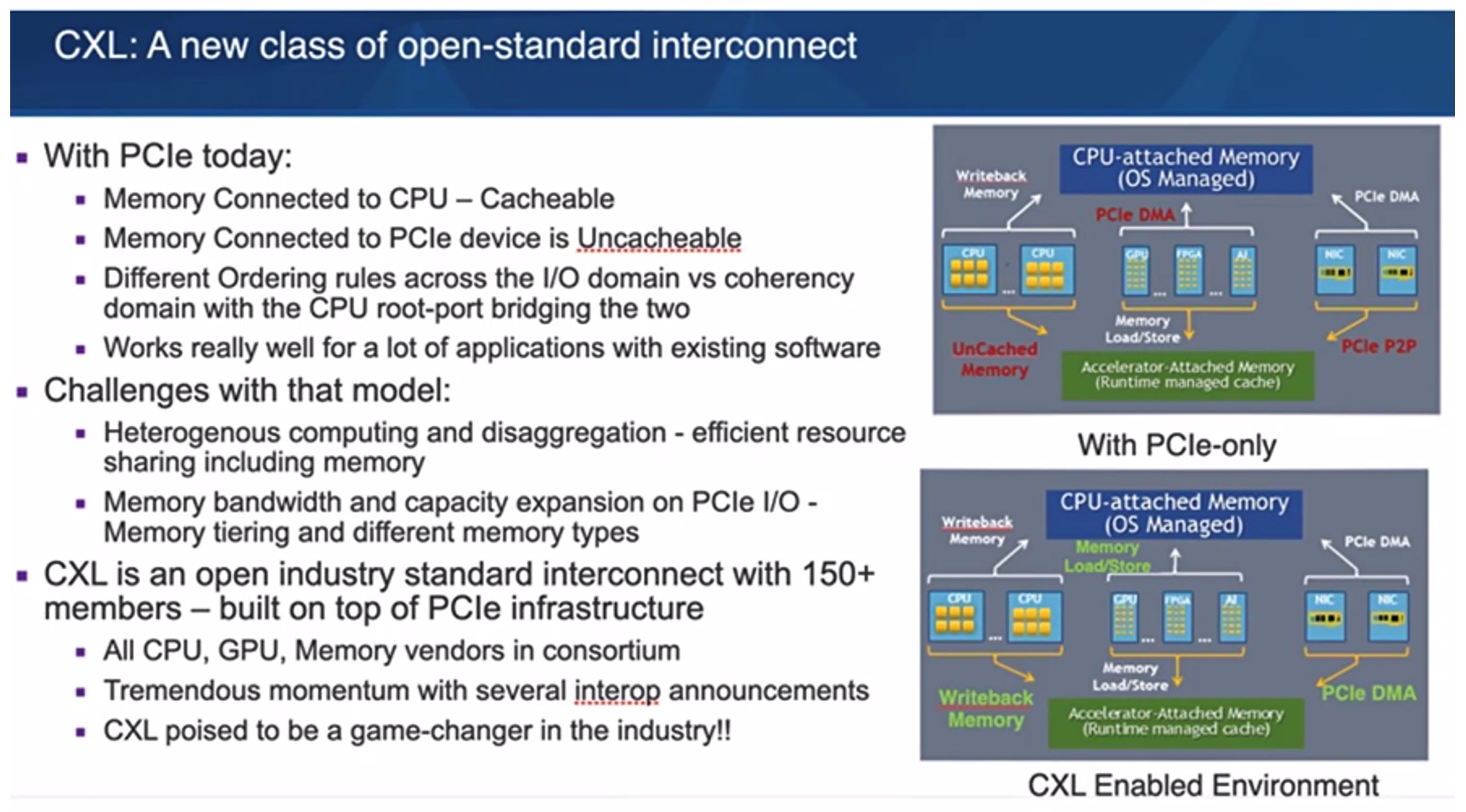

Compute Express Link is an open standard built on PCI Express (PCIe) that enables high-speed CPU-to-memory and CPU-to-device connections. For readability, we will keep some of the definitions short. The full CXL specification can be downloaded here (registration required).

CXL supports various device types and includes three separate protocols, each of which enables a specific set of actions and use cases: CXL.io (the transport and management layer, based on PCIe, specifically PCIe 5.0 for CXL 1.1 and 2.0), CXL.cache (lets a device coherently access and cache host memory), and CXL.mem (lets the host access memory attached to a device).

The standard also recognizes three primary device types:

- Type 1 devices: Typically accelerators without local memory, such as SmartNICs

- Type 2 devices: Usually GPUs, ASICs, and FPGAs, all of which are devices with local memory

- Type 3 devices: These devices expand a host’s total memory pool and usually consist of memory expansion boards or storage-class memory (SCM) such as Intel Optane.
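The list above does not spell out which of the three protocols each device type relies on. Per the CXL specification, every device type uses CXL.io; Type 1 adds CXL.cache, Type 2 adds both CXL.cache and CXL.mem, and Type 3 adds CXL.mem only. The minimal Python sketch that follows simply encodes that mapping as a lookup table. The names `Protocol`, `DEVICE_PROTOCOLS`, and `describe` are hypothetical and purely illustrative; they are not part of any real CXL driver or software stack.

```python
from enum import Enum


class Protocol(Enum):
    """The three CXL protocols (illustrative enum, not a real API)."""
    IO = "CXL.io"        # discovery, configuration, interrupts, DMA (PCIe-based)
    CACHE = "CXL.cache"  # device coherently caches host memory
    MEM = "CXL.mem"      # host accesses device-attached memory


# Hypothetical lookup table: device type -> protocols it uses.
# CXL.io is mandatory for every type; CXL.cache and CXL.mem tell the types apart.
DEVICE_PROTOCOLS = {
    1: {Protocol.IO, Protocol.CACHE},                # accelerators without local memory
    2: {Protocol.IO, Protocol.CACHE, Protocol.MEM},  # accelerators with local memory
    3: {Protocol.IO, Protocol.MEM},                  # memory expanders
}


def describe(device_type: int) -> str:
    """Return a readable, alphabetically sorted protocol list for a device type."""
    names = sorted(p.value for p in DEVICE_PROTOCOLS[device_type])
    return f"Type {device_type}: " + ", ".join(names)


if __name__ == "__main__":
    for t in (1, 2, 3):
        print(describe(t))
```

Reading the table by column makes the distinction clear: only devices that expose their own memory to the host (Types 2 and 3) speak CXL.mem, and only devices that need to cache host memory (Types 1 and 2) speak CXL.cache.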

In addition, the CXL specification is versioned. The latest version (CXL 3.0) is based on PCIe 6.0. However, as of today, the newest CXL version supported by shipping hardware is CXL 1.1, available on Intel Sapphire Rapids processors.

CXL, Storage-Class Memory, and Intel Optane

One of the aspects of the CXL specification I’m most excited about is its support for Type 3 devices, particularly storage-class memory. As someone who has written a fair share of content on Intel Optane, I was saddened (but not surprised) by Intel’s announcement that it was ending Optane development.

Is it really such a problem, though? Optane was unique and revolutionary in its own way. It opened the door to applications and use cases we hadn’t previously imagined, and it was very good for infrastructure consolidation, being the only way to drastically increase the amount of memory in a system at a fraction of the price of DRAM.

Nevertheless, the technology was proprietary and tied to a closed standard. With the emergence of CXL, many expected Intel to re-engineer Optane for CXL, but the divestment of its memory business put an end to this speculation.

In any case, Optane’s demise is far from the end of storage-class memory. On the contrary, the shift to an open standard will foster broader adoption of SCM, improve interoperability, and open the door to new use cases.

Transformational Outcomes

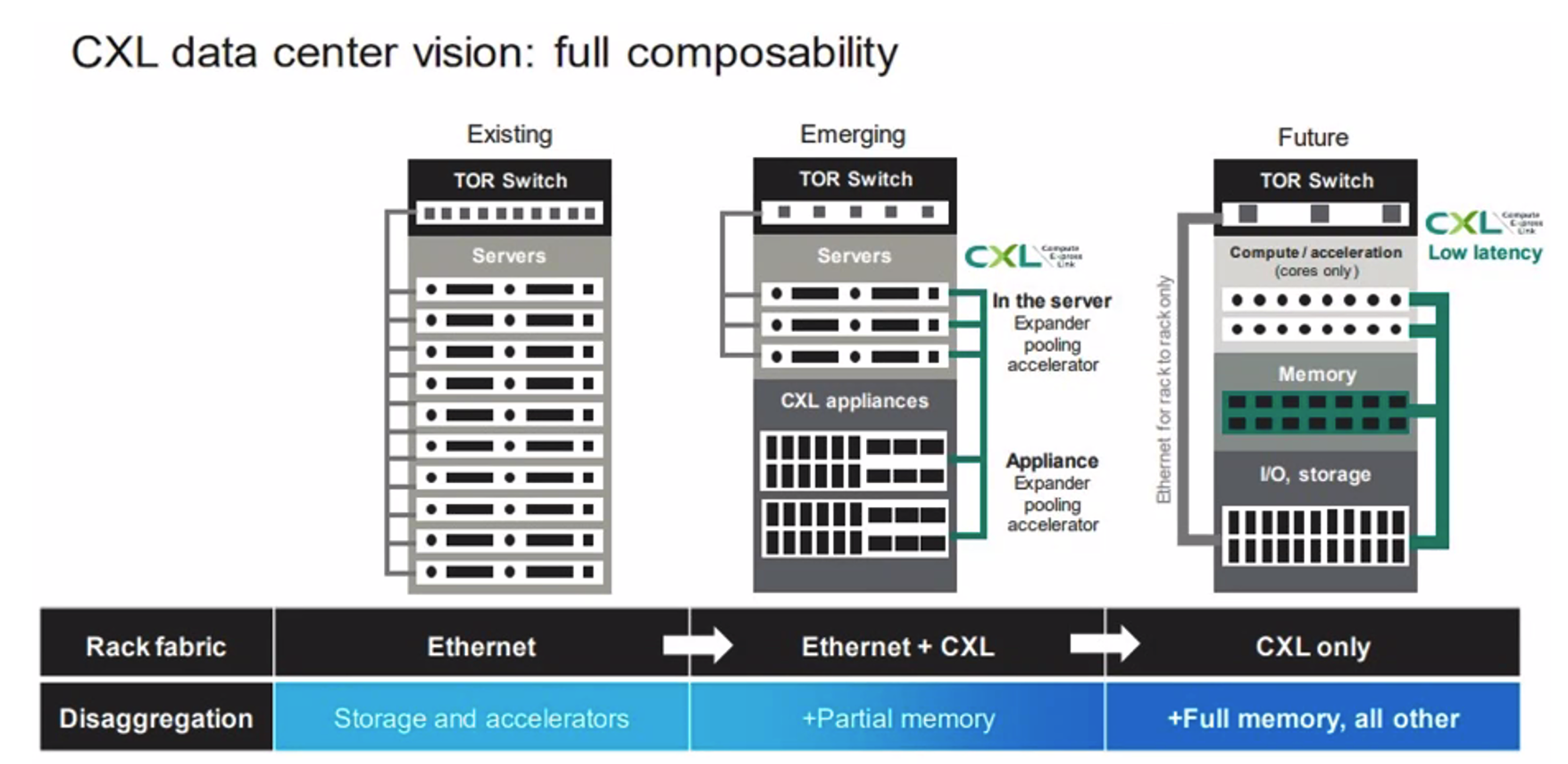

Future releases of the CXL specification will be even more transformative. Today, even though CXL allows cross-component and cross-server memory addressing, we are still bound by a limiting construct: the server chassis.

CXL opens the way to radical changes in the data center, which ties back to my earlier mention of the Gen-Z Consortium. The vision of Gen-Z was to build an infrastructure composability standard in which all the components currently located within a server chassis would be fully disaggregated.

Right now, the core component of a server is the CPU, and most of the communication between components in a computer goes through it. With the Gen-Z specification merged into CXL, it will become possible to build fully composable architectures in which processors, memory, GPUs, accelerators, storage, and other components are fully independent and interconnected via CXL.

The impact on data center architectures will be massive. Organizations will be able to scale their infrastructure flexibly, adding trays of memory, GPUs, or CPUs without the limitations inherent in today’s server chassis.

These new, fully composable architectures will also change how resources are allocated and how servers (whether virtual or “logically physical”) are partitioned. Security and availability will also become hot topics of discussion.