AI is a hot technology buzzword. Businesses are eager to use AI to make sense of enormous amounts of data and drive business decisions. Doing that requires an investment in architecture and software. Can you prove AI architecture performance and make a better infrastructure buying decision?

When I was asked by Gestalt IT to sit in on an Intel Cloud Showcase, I was excited to see how the industry has progressed since I left VMware. It turns out VMs would be a sticking point in the conversation with Accenture.

My background with AI VMs

At VMware, I was in the vSphere BU. I was fortunate enough to find a mentor in the Office of the CTO, Josh Simons, who taught me about all things AI/ML. I’ve blogged about AI infrastructure, and even did a VMware session three years ago with Dell GPU expert Tony Foster.

I’m well-versed in the architecture for these workloads. And that’s the key: AI applications are workloads. You need to build architecture that maximizes performance for the workload’s requirements. At VMware, all of my presentations had AI architecture performance images, as we had to work hard to convince on-premises data scientists that VMs were better than bare metal.

Turns out, performance is what this session was about.

About performance marketing

Performance testing is an important part of marketing for technical vendors. It is the validation of claims a company makes. See if you recognize the pattern:

What is the customer problem?

In a sales or marketing presentation, you’re going to hear about “a problem in the industry today”. This is “the why” behind the product. This presentation did a nice job of that.

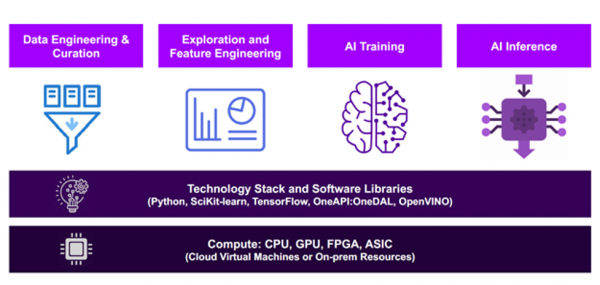

They described how AI is done in several compute-intensive stages. The faster and more economically you can work through the stages, the faster an organization can get the answers from AI. Of course, all of that will rest on the AI architecture performance.

How do Accenture and Intel solve the AI architecture performance problem?

They try to find ways to make AI platforms more efficient by improving the hardware infrastructure and optimizing the software running on that architecture.

They do this using VMs in the cloud. In addition to believing everyone is going all-in on cloud, they can also use the public cloud providers’ t-shirt-sized VMs to measure performance and make recommendations to their customers. That solves the architecture bit. To optimize the software, they use an Intel-specific Python distribution, Intel’s extension for scikit-learn, and oneAPI’s oneDAL library to help their clients.

They claim they use this expertise to help clients navigate choices that impact performance and cost savings.

What proof do they have?

Does Accenture have any real proof that their recommendations work? In this case, they do!

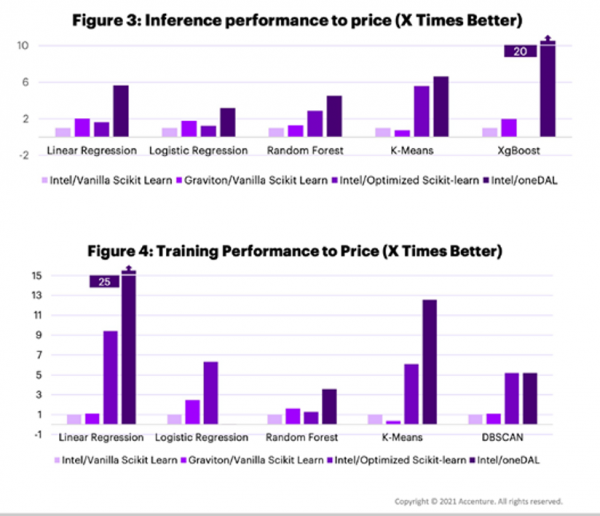

They ran performance benchmarks, first showing how much performance improved when the software was optimized for different types of training and inference jobs. They compared performance on “vanilla” Intel VMs (Cascade Lake) and Graviton VMs (AWS ARM).

Next, they layered on the costs of the VMs they tested.
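To make the idea of layering cost onto performance concrete, here is a minimal sketch of the arithmetic: cost per job is runtime multiplied by the VM's hourly price. The VM labels, runtimes, and prices below are made-up placeholders, not numbers from the presentation, and the function names are my own.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkRun:
    """One benchmark result. All values used below are hypothetical."""
    vm_label: str            # placeholder label, not a real instance type
    runtime_seconds: float   # wall-clock time to finish one job
    hourly_price_usd: float  # on-demand price of the VM per hour

    def cost_per_job(self) -> float:
        # Convert runtime to hours, then multiply by the hourly price.
        return self.runtime_seconds / 3600.0 * self.hourly_price_usd


def rank_by_cost(runs):
    """Return the runs sorted from cheapest to most expensive per job."""
    return sorted(runs, key=lambda run: run.cost_per_job())


# Hypothetical inputs, for illustration only.
runs = [
    BenchmarkRun("vm-a-stock", 1800.0, 0.40),
    BenchmarkRun("vm-a-optimized", 600.0, 0.40),
    BenchmarkRun("vm-b-stock", 1200.0, 0.30),
]

for run in rank_by_cost(runs):
    print(f"{run.vm_label}: ${run.cost_per_job():.4f} per job")
```

The point of the sketch is that a VM with a higher hourly price can still be the cheaper choice if optimized software finishes the job fast enough.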

Things that make you go hmmmm

This was a nice presentation with some interesting performance numbers. I do think it would be helpful to label the charts. Which cloud? We can infer AWS for the Graviton VMs, but Intel is in every cloud, even on-premises cloud-like environments. Which Intel chip was used in the test? And which AWS Intel VMs are the vanilla ones? Could the vanilla VMs be KVM or ESXi VMs on-premises? We really don’t know, so this information would be useful to have in these charts.

Additionally, is it really best for these workloads to be on a cloud, or should at least part of them be on-premises? I’d love to see that in the mix, especially with costs.

Speaking of costs, what parameters were included in the cost comparisons? Were storage and networking charges counted? Moving lots of data in and out of a cloud can also get expensive.
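As a rough illustration of why those parameters matter, the sketch below adds storage and data-egress charges to the compute bill. Every name and price here is an assumption for the sake of the example; real cloud pricing has more line items and tiers than this.

```python
def total_monthly_cost(
    compute_hours: float,
    hourly_price_usd: float,
    storage_gb: float = 0.0,
    storage_price_per_gb_month: float = 0.0,
    egress_gb: float = 0.0,
    egress_price_per_gb: float = 0.0,
) -> dict:
    """Break a monthly bill into compute, storage, and egress.

    All parameters are hypothetical inputs; nothing here reflects a
    specific provider's price list.
    """
    compute = compute_hours * hourly_price_usd
    storage = storage_gb * storage_price_per_gb_month
    egress = egress_gb * egress_price_per_gb
    return {
        "compute": compute,
        "storage": storage,
        "egress": egress,
        "total": compute + storage + egress,
    }


# Made-up numbers: compute is the smallest part of this bill.
bill = total_monthly_cost(
    compute_hours=100,
    hourly_price_usd=0.40,
    storage_gb=5000,
    storage_price_per_gb_month=0.02,
    egress_gb=2000,
    egress_price_per_gb=0.09,
)
for item, amount in bill.items():
    print(f"{item}: ${amount:.2f}")
```

With inputs like these, a comparison based on VM price alone would miss most of the spend, which is why it helps to know what the cost charts include.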

I want to be clear: this isn’t a knock on the performance data provided. I think it is good, and I love the idea that these benchmarks are collected as things change on the cloud platform. Over time, this data will be very valuable for customers trying to make good decisions about how to run AI workloads in the cloud. The things I’m pointing out would just make their information bulletproof.

Real talk about AI architecture performance

This was a very good presentation, but I would have also liked to see the basics. The basics of performance marketing are showing your assumptions as well as your starting points. Show us what you used to validate your proof points! There is really nothing better for a product marketing strategy than solid performance proof points. They are a lot of work, but they make it so easy to prove your product can close business gaps.

But don’t just take my word for it. Watch Accenture’s Tech Field Day Showcase presentation with Intel to see for yourself how their new AI offering affects performance. You can also visit Intel.com or consult your Intel sales representative for more information.