Introduction

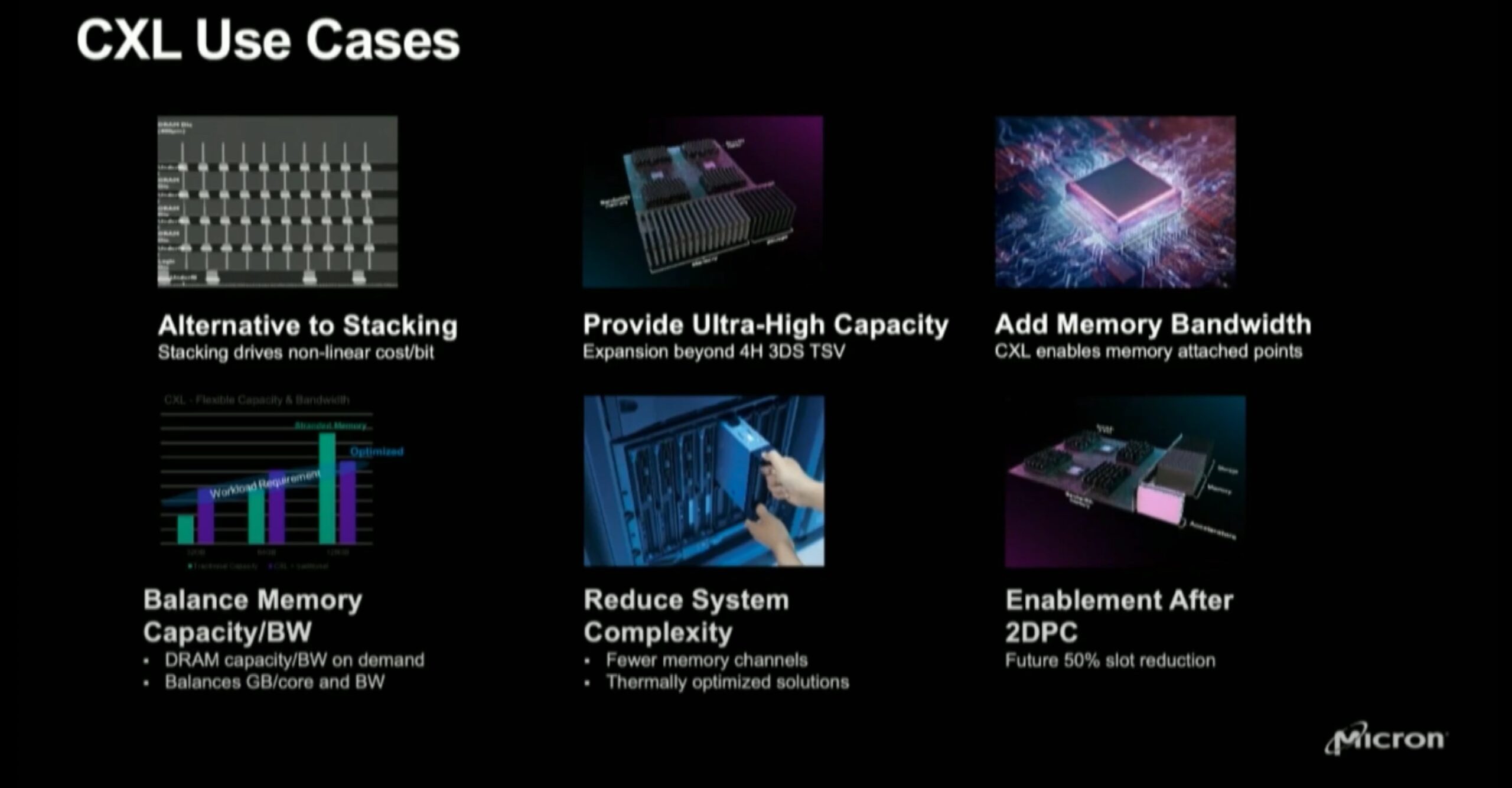

On October 20th, 2022, Micron took part in the Open Compute Project’s CXL Forum. The forum was a marquee part of the OCP Global Summit: an entire day of research presentations, plans for CXL, and demonstrations of both current and future CXL technologies. Micron’s session, which was nothing short of excellent, discussed CXL’s market outlook through a few of the technology’s use cases.

CXL’s Capabilities, Past and Present

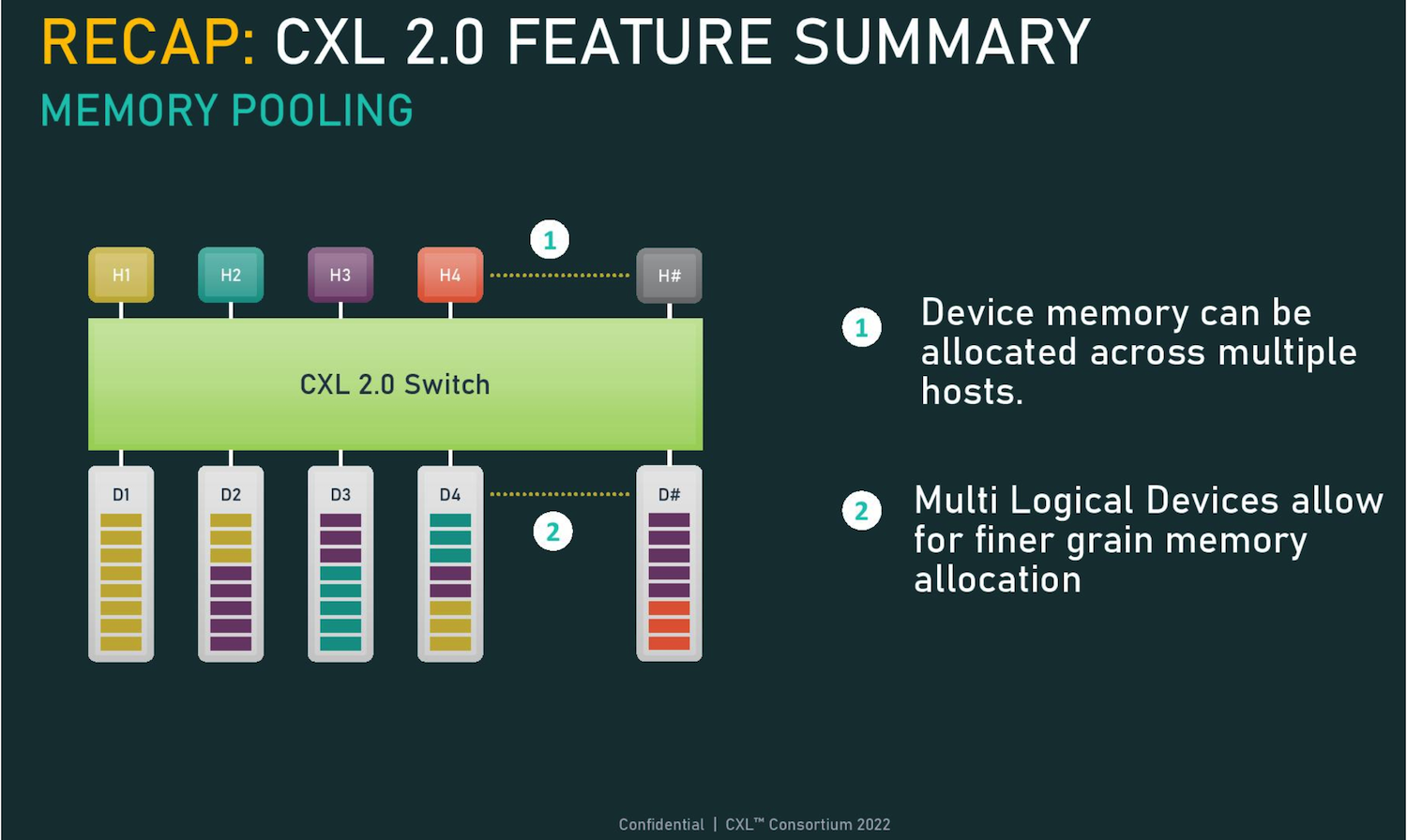

Compute Express Link (or “CXL”) is a developing standard that runs over PCIe and links together devices that previously communicated only inside a single server. Which kinds of devices can be linked depends on the version of CXL. For example, CXL 1.1, released in 2019, introduced the ability for a server’s CPU to communicate directly with a memory expander attached to that server. CXL 2.0, introduced in 2020, added support for single-level CXL switching, so that a host could connect to multiple CXL-compatible devices through a switch instead of requiring 1:1 connectivity. The CXL 3.0 specification, released in 2022, adds PCIe 6.0 signaling, multi-level switching, and double the bandwidth of CXL 2.0, among many other features.
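To put the bandwidth doubling in numbers, here is a small back-of-the-envelope sketch. It assumes CXL 1.1/2.0 run on PCIe 5.0 signaling (32 GT/s per lane) and CXL 3.0 on PCIe 6.0 signaling (64 GT/s per lane), and it deliberately ignores encoding and FLIT overhead, so the results are raw upper bounds rather than measured throughput. The function name `raw_bandwidth_gb_s` is just an illustrative helper.

```python
# Raw per-direction link bandwidth, ignoring encoding and FLIT overhead.
# Assumption: CXL 1.1/2.0 use PCIe 5.0 signaling (32 GT/s per lane) and
# CXL 3.0 uses PCIe 6.0 signaling (64 GT/s per lane).

TRANSFER_RATE_GT_S = {
    "CXL 1.1 / 2.0 (PCIe 5.0)": 32,
    "CXL 3.0 (PCIe 6.0)": 64,
}


def raw_bandwidth_gb_s(rate_gt_s: float, lanes: int) -> float:
    """Approximate raw bandwidth in GB/s per direction.

    Each transfer moves one bit per lane, so divide by 8 to get bytes.
    """
    return rate_gt_s * lanes / 8


if __name__ == "__main__":
    for generation, rate in TRANSFER_RATE_GT_S.items():
        bw = raw_bandwidth_gb_s(rate, 16)
        print(f"{generation}: x16 is about {bw:.0f} GB/s per direction")
```

An x16 link works out to roughly 64 GB/s per direction on PCIe 5.0 signaling versus roughly 128 GB/s on PCIe 6.0 signaling, which is where the “double the bandwidth” figure comes from.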

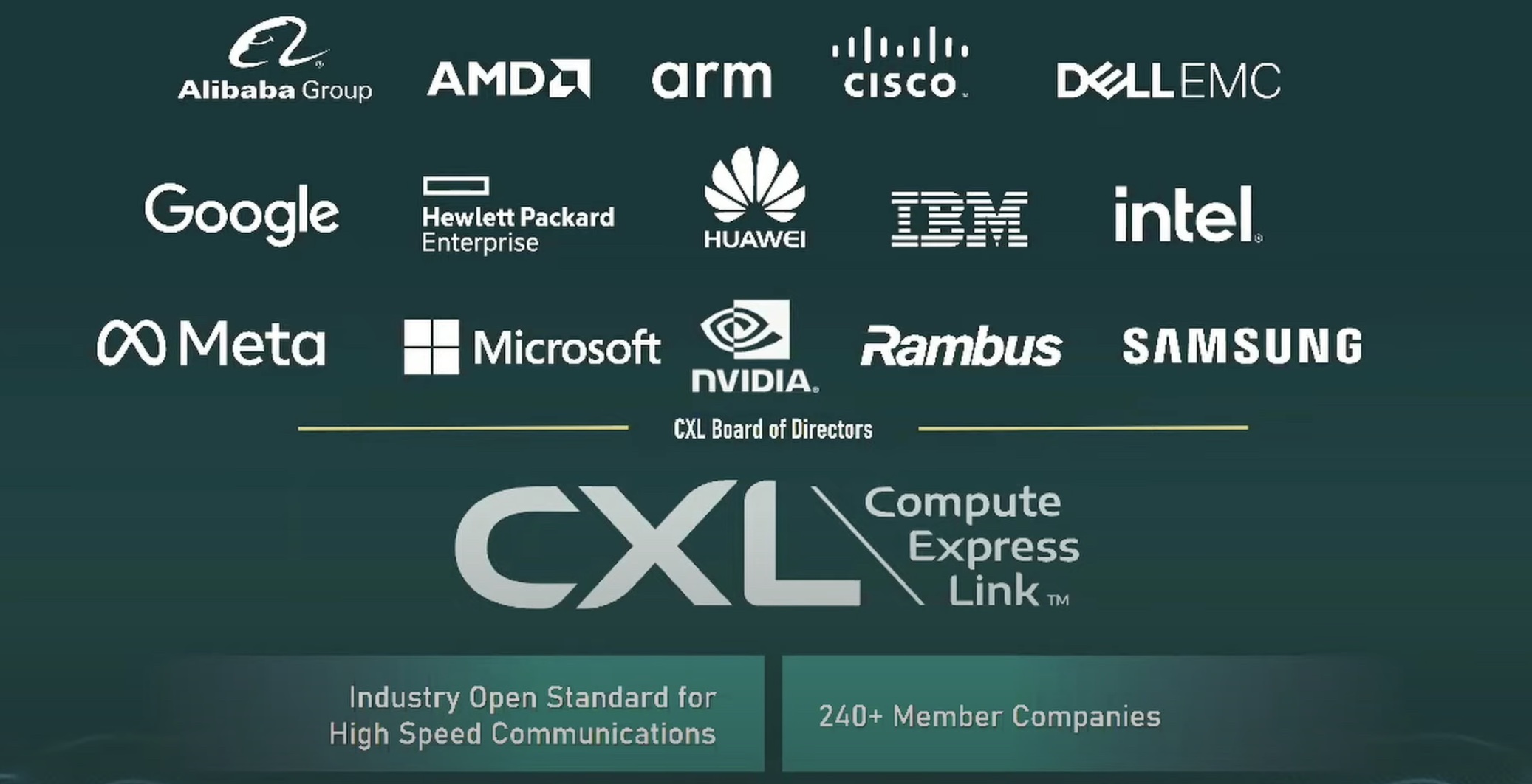

CXL’s end goal is to completely redefine the data center to allow for greater flexibility in design. The standard is backed by just about every major company you can think of: from hardware and chip manufacturers such as Intel, Micron, and NVIDIA, to software and platform providers such as IBM, Google, and Meta.

In 2021, Micron announced that it had stopped developing 3D XPoint memory in order to focus on CXL. This looks to have been the right decision: Micron’s presentation suggested that CXL’s potential will greatly surpass what had been hoped for from the 3D XPoint project.

Micron’s presentation highlighted that CPU designs have been emphasizing ever-higher core counts, while chip and motherboard designs have kept standard memory deployments from keeping pace in bandwidth, especially for resource-intensive applications. What applications need is faster access to more memory, and CXL can provide that. CXL attaches memory over PCIe rather than through the CPU’s DDR memory channels, so it is not constrained by the motherboard’s DIMMs-per-channel limits, which new DDR designs are expected to make even more restrictive.
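The bandwidth-per-core squeeze is easy to see with simple arithmetic. The sketch below is illustrative only: the 8-channel DDR5-4800 socket, the core counts, and the roughly 64 GB/s contributed by one x16 CXL expander are hypothetical example values, not figures from Micron’s talk. It computes peak DDR channel bandwidth (transfer rate times the 8-byte channel width) and divides it across cores.

```python
# Illustrative arithmetic only: channel count, DDR5 speed, core counts, and
# the CXL expander bandwidth are hypothetical examples.

BYTES_PER_TRANSFER = 8  # a DDR channel is 64 bits (8 bytes) wide, excluding ECC


def channel_bandwidth_gb_s(mega_transfers_per_s: int) -> float:
    """Peak bandwidth of one DDR channel in GB/s."""
    return mega_transfers_per_s * BYTES_PER_TRANSFER / 1000


def bandwidth_per_core_gb_s(channels: int, mt_s: int, cores: int,
                            extra_gb_s: float = 0.0) -> float:
    """Peak memory bandwidth per core, optionally adding bandwidth
    contributed by CXL-attached memory (extra_gb_s)."""
    total = channels * channel_bandwidth_gb_s(mt_s) + extra_gb_s
    return total / cores


if __name__ == "__main__":
    # Hypothetical socket: 8 channels of DDR5-4800 (about 307 GB/s total).
    for cores in (32, 64, 96):
        per_core = bandwidth_per_core_gb_s(8, 4800, cores)
        print(f"{cores} cores: {per_core:.1f} GB/s per core")
    # Same socket plus one hypothetical x16 CXL expander (~64 GB/s raw).
    with_cxl = bandwidth_per_core_gb_s(8, 4800, 96, extra_gb_s=64.0)
    print(f"96 cores + CXL: {with_cxl:.1f} GB/s per core")
```

With a fixed number of channels, tripling the core count from 32 to 96 cuts peak bandwidth per core from 9.6 GB/s to 3.2 GB/s; adding CXL-attached memory recovers some of that without needing more DIMM slots.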

Using CXL to Solve Memory Management Problems Today

CXL Memory Pooling is a feature designed specifically for this problem. If a host needs more memory, it can be assigned capacity from a shared pool of CXL-attached memory, dedicated to that host until it is released. This CXL connectivity allows per-host memory allocations that far exceed the limits of main system memory.
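As a rough mental model of “dedicated” pooled allocations, the toy sketch below tracks a fixed amount of CXL-attached capacity and hands out non-overlapping slices to hosts, returning them to the pool on release. The `MemoryPool` class, `PoolExhausted` exception, and host names are hypothetical illustrations; real pooling is managed by CXL switches, devices, and fabric-management software, not by an API like this.

```python
from __future__ import annotations

from dataclasses import dataclass, field


class PoolExhausted(Exception):
    """Raised when a request exceeds the pool's free capacity."""


@dataclass
class MemoryPool:
    """Toy model of a CXL memory pool (hypothetical, for illustration).

    Capacity is carved into dedicated allocations: each gigabyte belongs
    to at most one host at a time.
    """
    capacity_gb: int
    allocations: dict[str, int] = field(default_factory=dict)

    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.allocations.values())

    def assign(self, host: str, size_gb: int) -> None:
        """Dedicate size_gb of pool capacity to host."""
        if size_gb <= 0:
            raise ValueError("size must be positive")
        if size_gb > self.free_gb():
            raise PoolExhausted(f"pool has only {self.free_gb()} GB free")
        self.allocations[host] = self.allocations.get(host, 0) + size_gb

    def release(self, host: str) -> int:
        """Return a host's capacity to the pool; returns the GB released."""
        return self.allocations.pop(host, 0)


if __name__ == "__main__":
    pool = MemoryPool(capacity_gb=1024)
    pool.assign("host-a", 512)
    pool.assign("host-b", 256)
    pool.release("host-a")       # host-a's 512 GB goes back to the pool
    pool.assign("host-c", 700)   # and can now be dedicated to another host
    print(pool.allocations, pool.free_gb(), "GB free")
```

The key design point is that capacity is stranded in no single server: once one host releases its share, the same physical memory can be dedicated to a different host.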

See the diagram below that shows how CXL 2.0 enables Memory Pooling:

It is true that CXL-attached memory will be slower than DIMMs on the motherboard, but not by as much as you might think (Micron states that CXL-based servers show latency from CPU to CXL memory that is “equivalent to a single NUMA hop”). Micron also highlighted that workload capacity and bandwidth can be ‘dialed in’ as system performance is observed and fine-tuned over time to take advantage of the different memory ‘tiers.’ Many very memory-hungry applications, such as AI/ML, NLP, and in-memory databases, will benefit significantly from this additional system memory capacity and bandwidth, even if CXL memory does not run at the full speed of on-board DIMMs.
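One way to reason about “dialing in” memory tiers is a weighted average: if some fraction of accesses hits local DIMMs and the rest hits CXL memory, average latency is the access-weighted mix of the two. The sketch below uses hypothetical placeholder latencies (100 ns local, 200 ns CXL), not Micron’s measurements, and ignores caching and bandwidth contention; it is a simplified model, not a performance prediction.

```python
# Simplified two-tier latency model. The latency values are hypothetical
# placeholders; substitute measured numbers for a real system.


def average_latency_ns(local_fraction: float, local_ns: float,
                       cxl_ns: float) -> float:
    """Access-weighted average load latency when local_fraction of accesses
    hit local DIMMs and the remainder hit CXL-attached memory."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * cxl_ns


if __name__ == "__main__":
    LOCAL_NS, CXL_NS = 100.0, 200.0  # hypothetical
    for frac in (1.0, 0.9, 0.75, 0.5):
        avg = average_latency_ns(frac, LOCAL_NS, CXL_NS)
        print(f"{frac:.0%} of accesses local: {avg:.0f} ns average")
```

Under these assumed numbers, keeping 90% of accesses on local DRAM raises average latency by only about 10%, which is why placing hot data in the faster tier and colder data in CXL memory matters.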

Conclusion

There is a lot to like about CXL, and Micron showed its performance advantages both with existing hardware and for CXL’s future. There is also a TCO benefit to expanding memory with a CXL-compatible device rather than buying a whole new server. In the post-CXL 3.0 future, this kind of flexibility will extend beyond memory to basically any system component you can think of: CPUs, GPUs, storage, and more.

While the standard has been around for a while (remember, CXL 1.0 was announced in 2019), only in the last year or so have we seen real-world examples of the technology in action. Micron’s presentation showed the practical benefits CXL will bring to datacenters. If you would like to keep up with what Micron is doing on this front, check out their server tech landing page.

Micron Technology’s Ryan Baxter also guested on our Utilizing CXL Podcast, part of our Utilizing Tech series. Watch his episode to hear more on Micron’s CXL efforts.