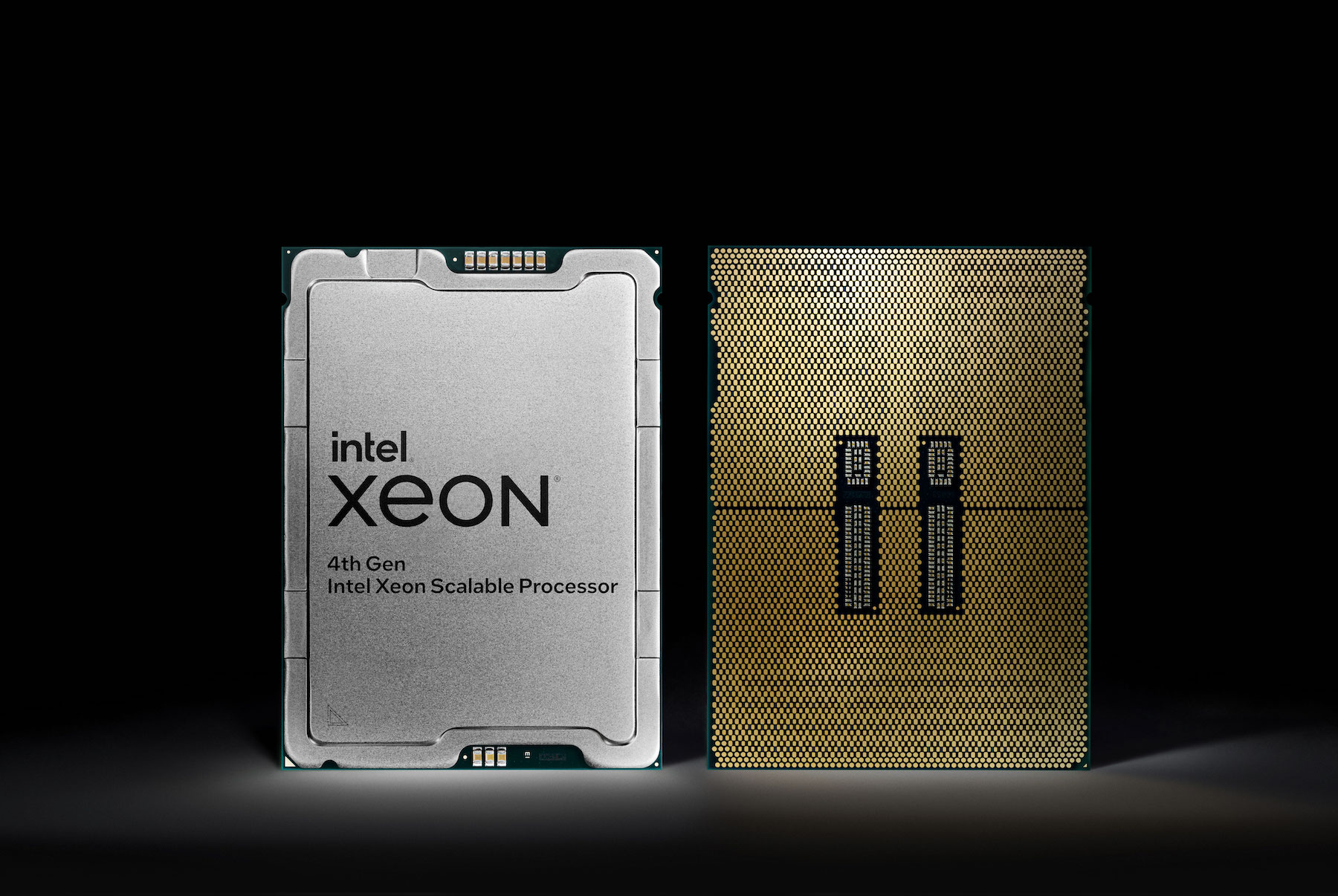

AI processing is appearing everywhere, running on just about any kind of infrastructure, from the cloud to the edge to end-user devices. Although we might think AI processing requires massive centralized resources, this is not necessarily the case. Deep learning training might need centralized resources, but AI goes well beyond training, and it is likely that most production applications will use CPUs to process data in place. Simpler machine learning applications don’t need specialized accelerators, and Intel has been building specialized hardware support into its processors for a decade. DL Boost on Xeon is competitive with discrete GPUs thanks to specialized instructions and optimized software libraries.

Three Questions

- How long will it take for a conversational AI to pass the Turing test and fool an average person?

- Is it possible to create a truly unbiased AI?

- How small can ML get? Will we have ML-powered household appliances? Toys? Disposable devices?

Guests and Hosts

- Eric Gardner, Director of AI Marketing at Intel. Connect with Eric on LinkedIn or on Twitter at @DataEric

- Chris Grundemann, Gigaom Analyst and Managing Director of Grundemann Technology Solutions. Connect with Chris on ChrisGrundemann.com and on Twitter at @ChrisGrundemann

- Stephen Foskett, Publisher of Gestalt IT and Organizer of Tech Field Day. Find Stephen’s writing at GestaltIT.com and on Twitter at @SFoskett

For your weekly dose of Utilizing AI, subscribe to our podcast on your favorite podcast app through Anchor FM, and watch more Utilizing AI podcast videos on the dedicated website: https://utilizing-ai.com/