There is a long-standing gulf between developers and operations, let alone infrastructure, and this is made worse by the scale and limitations of edge computing. This episode of Utilizing Edge features Carl Moberg of Avassa discussing the application-first mindset of developers with Brian Chambers and Stephen Foskett. As we’ve been discussing, it’s critical to standardize infrastructure to make it supportable at the edge, yet we must also build platforms that are attractive to application owners.

- Stephen Foskett, Publisher of Gestalt IT and Organizer of Tech Field Day. Find Stephen’s writing at GestaltIT.com and on Twitter at @SFoskett.

- Brian Chambers, Technologist and Chief Architect at Chick-fil-A. Connect with Brian on LinkedIn and Twitter. Read his blog on Substack.

- Carl Moberg, CTO and Cofounder, Avassa. You can connect with Carl on LinkedIn and find out more on their website.

For your weekly dose of Utilizing Edge, subscribe to our podcast on your favorite podcast app through Anchor FM and check out more Utilizing Tech podcast episodes on the dedicated website, https://utilizingtech.com/.

Key Points about Edge Application Management

- Stephen emphasizes the importance of adopting an application-first mindset in IT infrastructure, ensuring that the infrastructure supports diverse applications and is optimized for users. He expresses concerns about the challenge of achieving this approach.

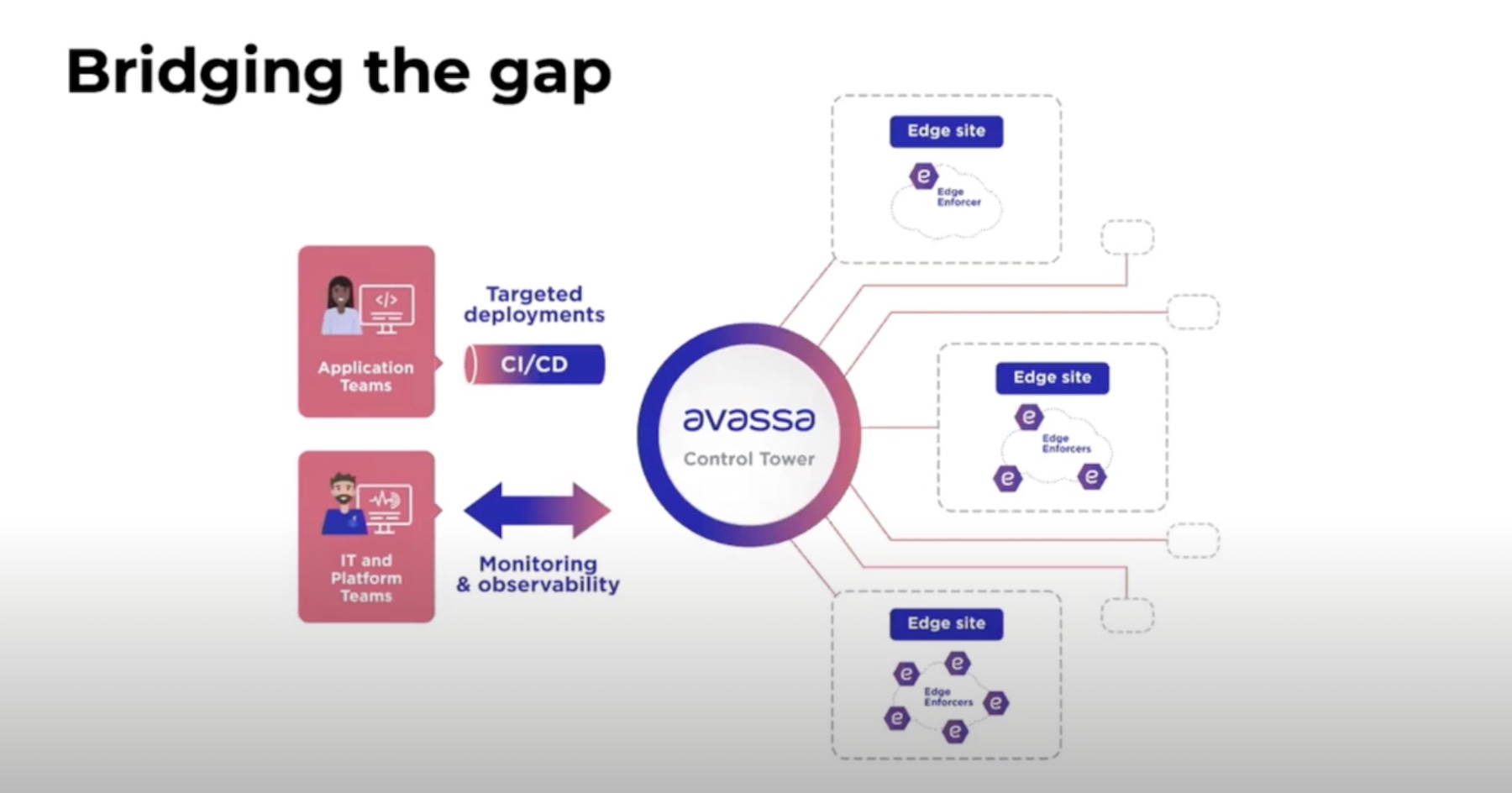

- Carl discusses the need for systems that prioritize applications as the central abstraction, enabling easy integration with application teams and their tooling. He emphasizes the importance of building supporting infrastructure that revolves around applications for better manageability and observability.

- Stephen emphasizes the need for an application-first approach in IT infrastructure, highlighting the common tendency to prioritize infrastructure over applications. He discusses the importance of supporting diverse applications, optimizing for users, and attracting developers and application owners to ensure standardization.

- Carl supports the idea of centralizing applications as the primary abstraction in computing platforms. He advocates for systems that prioritize applications, enabling seamless integration with application teams and their tooling. He suggests focusing on building supporting infrastructure that revolves around applications to enhance manageability and observability.

- Brian asks about the key components that make a solution developer-friendly and focused on application-centric needs, highlighting the importance of considering the requirements and preferences of software engineers and application-centric individuals when designing edge solutions.

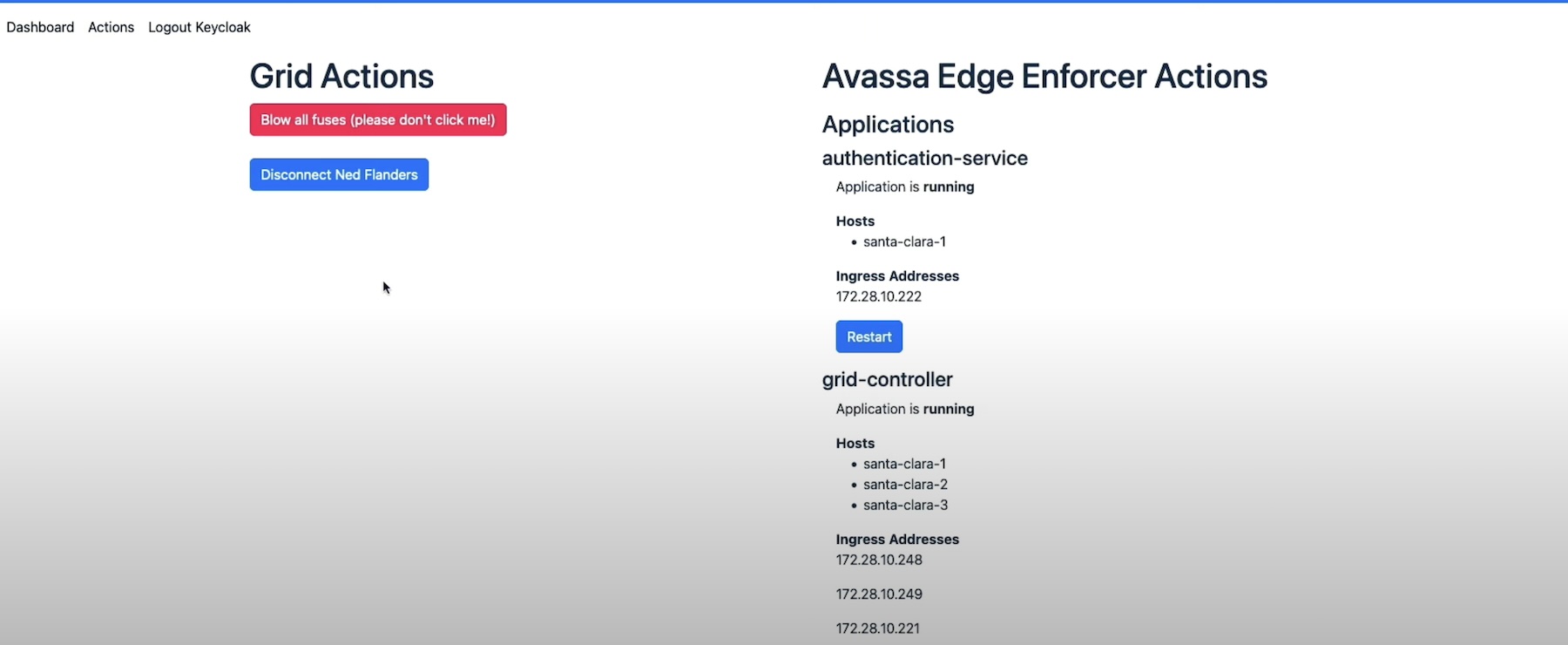

- Carl suggests that starting with the application as the central object in the system is crucial for a developer-friendly experience. He emphasizes the need for systems to provide information about which applications are running and their locations, as well as their health status and performance, to facilitate effective management and monitoring.

- Carl also discusses the potential benefits of exposing certain aspects of the underlying infrastructure to application teams, such as the availability of GPUs or attached cameras, to enable informed placement decisions and enhance the application team’s understanding of the infrastructure.

- Stephen points out the challenge of balancing standardization with application-centric infrastructure at the edge. While standardization is necessary for managing multiple locations, it is essential to find a way to describe the infrastructure needs of application owners in a standardized manner that aligns with deployment requirements.

- Carl acknowledges the existing efforts in the IT industry to establish standards for infrastructure description, such as SMBIOS, DMI, and Redfish. However, he expresses uncertainty about whether a single, comprehensive standard will emerge. He discusses the pragmatic approach of building on top of existing frameworks and using data models to address the lack of structure, but acknowledges the complexity and the need for further exploration.

- Brian highlights the importance of providing reasonable abstractions and APIs for developers to focus on building applications without having to worry about infrastructure details. While some specific applications may require knowledge of the underlying infrastructure, the trend is towards minimizing the level of concern and surfacing it only when necessary, such as in the case of GPUs.

- Carl agrees with the idea of finding a middle ground where infrastructure details are not excessively required, but certain labels or indicators, such as the presence of external devices or specific capabilities like GPUs, can be used. However, he acknowledges the challenge of naming and taxonomy management in complex edge container environments, suggesting a combination of human input and careful use of automated matching patterns.

- Stephen points out the fundamental limitations of the edge, including customization, supportability, and hardware constraints. He emphasizes the need to work within these limitations and describes the “second application problem,” where organizations underestimate the future infrastructure requirements of edge deployments, leading to operational challenges and inefficiencies.

- Brian discusses the art of sizing edge infrastructure, balancing the initial investment with the anticipation of future use cases. He highlights the importance of not overinvesting while also ensuring the platform can support multiple workloads. He mentions the hardware lifecycle and the need to consider business plans and hardware longevity at the edge. The conversation then shifts to the concept of scaling at the edge and Carl’s perspective on it.

- Carl raises the question of over-investing and how much it matters in edge deployments, given both the financial investment and physical space constraints. He also asks whether declaring a project a failure and writing off the expense was considered within the planning horizon.

- Brian acknowledges the possibility of failure and explains their approach to investment: making it big enough to accommodate potential success without risking a complete disaster if it fails. He highlights the flexibility they maintained in their hardware investment and the ability to refresh or scale up if needed. They consider factors like physical space and network capacity when scaling infrastructure, and they plan resource consumption around analysis and refresh cycles.

- Stephen and Brian discuss the hard limits and constraints faced in edge deployments, such as limited switch ports and scalability issues, which are rarely encountered in data center and cloud environments.

- Carl emphasizes the importance of building a developer-friendly and application-centric infrastructure by starting from the API level and reusing existing components and abstractions, like application structure and container registries. He also highlights the need to give application-centric users the ability to define where and under what circumstances their applications should run.

- Carl suggests that the conversation should revolve around the abstractions of applications and their deployment locations, which can have configuration aspects like specific hardware requirements or geographical constraints. He believes that focusing on these abstractions and making them easy to use and understand can be a game-changer in the industry.
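
To make the idea of applications and deployment locations as the core abstractions a little more concrete, here is a minimal sketch of label-based placement in Python. It is not Avassa's API or any product's actual data model; the `Site` and `Application` classes, the `eligible_sites` function, and the label names (`gpu`, `camera`, `region`) are all hypothetical, chosen only to illustrate how an application team might declare where an application should run without knowing the details of each location.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    """A hypothetical edge location described by simple key/value labels."""
    name: str
    labels: dict[str, str] = field(default_factory=dict)


@dataclass
class Application:
    """A hypothetical application and the labels a site must carry to host it."""
    name: str
    required_labels: dict[str, str] = field(default_factory=dict)


def eligible_sites(app: Application, sites: list[Site]) -> list[str]:
    """Return the names of sites whose labels satisfy every requirement of the app."""
    return [
        site.name
        for site in sites
        if all(site.labels.get(key) == value
               for key, value in app.required_labels.items())
    ]


# Illustrative inventory: the label names and values are assumptions.
sites = [
    Site("store-001", {"gpu": "true", "camera": "true", "region": "us-east"}),
    Site("store-002", {"gpu": "false", "camera": "true", "region": "us-east"}),
    Site("store-003", {"gpu": "true", "camera": "false", "region": "eu-north"}),
]

# The application team states needs in terms of capabilities, not hardware models.
vision_app = Application("vision-inference", {"gpu": "true", "camera": "true"})
pos_app = Application("point-of-sale", {"region": "us-east"})

print(eligible_sites(vision_app, sites))
print(eligible_sites(pos_app, sites))
```

In this sketch the infrastructure team owns the labels on each site and the application team owns the requirements, which mirrors the middle ground discussed in the episode: infrastructure details surface only where they matter, such as a GPU or an attached camera. Keeping the label vocabulary consistent across many sites is the naming and taxonomy problem Carl describes, and the sketch does not attempt to solve it.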