This week, Intel kicked off their next-generation “Xeon Scalable” server CPU line, abandoning the old E5 and E7 names for a “metal” theme. Unlike AMD’s Threadripper and Epyc launches, there are no surprises in the new Xeon lineup: The Skylake Xeon looks a lot like the Skylake high-end desktop parts Intel announced last month. Apart from core count, the news focuses on Intel’s new on-die mesh, the Omni-Path Fabric interconnect, and the AVX-512 instruction set. And, of course, head-to-head comparisons with AMD!

Ride the Lightning

Intel is really hyping this launch, calling it the “Biggest Data Center Platform Advancement in a Decade” in slide after slide. But is it? After all, apart from reshuffling the model names, what is Intel announcing here but a new “tock” in their tick-tock release clock?

Intel’s next-generation Xeon CPUs are aligned with four metals (instead of “E5” and “E7”)

Many of the advances seen in Xeon Scalable (also known as Skylake-SP) come straight from Skylake-X and plain old Skylake. And this isn’t a bad thing: Skylake was an impressive advance for Intel, and Skylake-X stands a decent chance of fending off AMD’s Ryzen/Threadripper assault.

Truthfully, there are some major advances here. Chief among these is the new on-die mesh, which replaces the ring bus that previously linked the cores. AnandTech does a wonderful job outlining the shortcomings of Intel’s old ring bus, but the backlash has already started: The new mesh is slower than the old dual ring, and latency is added as data hops between cores. But AMD won’t be squawking about this, since Intel’s mesh is much faster than the inter-die communication inside an Epyc chip.
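Why accept any extra latency at all? One way to see the appeal of a mesh is simply to count hops. The following is a deliberately simplified model, assuming a single bidirectional ring (Intel’s real design used two rings joined by switches) and a 2D grid with X-then-Y routing; the 28-stop and 4 x 7 sizes are hypothetical, not Intel’s actual die layout. It counts hops only, so it says nothing about per-hop latency or mesh clock speed, which is exactly where the complaints are focused.

```python
from itertools import product


def ring_avg_hops(stops: int) -> float:
    """Average shortest-path hop count between distinct stops on one bidirectional ring."""
    total = 0
    for a in range(stops):
        for b in range(stops):
            if a != b:
                d = abs(a - b)
                total += min(d, stops - d)  # traffic may go either way round the ring
    return total / (stops * (stops - 1))


def mesh_avg_hops(rows: int, cols: int) -> float:
    """Average hop count between distinct tiles on a 2D mesh with X-then-Y routing."""
    tiles = list(product(range(rows), range(cols)))
    total = 0
    pairs = 0
    for (r1, c1), (r2, c2) in product(tiles, tiles):
        if (r1, c1) != (r2, c2):
            total += abs(r1 - r2) + abs(c1 - c2)  # Manhattan distance = hops
            pairs += 1
    return total / pairs


if __name__ == "__main__":
    # Hypothetical sizes: 28 ring stops versus a 4 x 7 grid of 28 tiles.
    print(f"ring: {ring_avg_hops(28):.2f} hops, mesh: {mesh_avg_hops(4, 7):.2f} hops")
    # → ring: 7.26 hops, mesh: 3.67 hops
```

Under these assumptions the mesh roughly halves the average hop count, and the gap widens with core count: average ring distance grows linearly with the number of stops, while average mesh distance grows roughly with its square root.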

Like Skylake-X (and unlike any mainstream desktop chip), Skylake-SP reshuffles the on-chip cache hierarchy. Rather than bulking up the L3 cache as in most previous x86 processors, these chips have a smaller, non-inclusive L3 cache and a larger L2. Intel apparently sees a performance advantage in this arrangement for many-core processors.
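The inclusive/non-inclusive distinction matters for capacity: an inclusive L3 must keep a copy of every line held in L2, so the L2 adds no unique storage, whereas a non-inclusive L3 is not required to duplicate L2 contents. A rough sketch of that arithmetic follows. The per-core sizes are assumptions taken from commonly cited figures (Broadwell-EP: 256 KB L2 and a 2.5 MB L3 slice; Skylake-SP: 1 MB L2 and a 1.375 MB L3 slice), and the non-inclusive sum is only an upper bound, since non-inclusive is not the same as strictly exclusive.

```python
def unique_capacity_per_core(l2_mb: float, l3_mb: float, inclusive: bool) -> float:
    """Upper bound on distinct data held in a core's L2 plus its share of L3, in MB.

    Inclusive L3: every L2 line is duplicated in L3, so only the larger level counts.
    Non-inclusive L3: lines need not be duplicated, so capacities can add up.
    """
    if inclusive:
        return max(l2_mb, l3_mb)
    return l2_mb + l3_mb


if __name__ == "__main__":
    # Assumed per-core figures (see lead-in); not taken from the article itself.
    print(f"Broadwell-EP (inclusive):   {unique_capacity_per_core(0.25, 2.5, True):.3f} MB")
    print(f"Skylake-SP (non-inclusive): {unique_capacity_per_core(1.0, 1.375, False):.3f} MB")
    # → 2.500 MB and 2.375 MB respectively
```

On this rough model the total unique capacity per core barely changes, but far more of it now sits in the private, lower-latency L2, which is presumably the advantage Intel is after.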

Among the Living

Another new feature (for Xeon) is the optional Omni-Path Fabric interconnect for direct CPU-to-network communication. Pioneered in Xeon Phi and available for a few years now, Omni-Path exposes a PCIe 3.0 x16 connection through an interface directly on the CPU itself. This can be used for up to 100 Gbps networking in high-performance computing.
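A quick bandwidth check shows why a full x16 link is needed for 100 Gbps. This sketch assumes PCIe 3.0’s 8 GT/s per-lane signalling rate and 128b/130b line encoding, and it ignores packet and protocol overhead, so real throughput is somewhat lower than these figures.

```python
PCIE3_GT_PER_S = 8.0        # PCIe 3.0 signalling rate per lane, gigatransfers per second
PCIE3_ENCODING = 128 / 130  # 128b/130b line-code efficiency


def pcie3_raw_gbps(lanes: int) -> float:
    """Per-direction PCIe 3.0 bandwidth in Gb/s after line coding (no packet overhead)."""
    return PCIE3_GT_PER_S * PCIE3_ENCODING * lanes


if __name__ == "__main__":
    for lanes in (8, 16):
        bw = pcie3_raw_gbps(lanes)
        print(f"x{lanes}: {bw:.1f} Gb/s, enough for 100 Gb/s: {bw >= 100}")
    # → x8: 63.0 Gb/s, enough for 100 Gb/s: False
    # → x16: 126.0 Gb/s, enough for 100 Gb/s: True
```

An x8 link tops out well below 100 Gb/s, while x16 leaves some headroom for protocol overhead.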

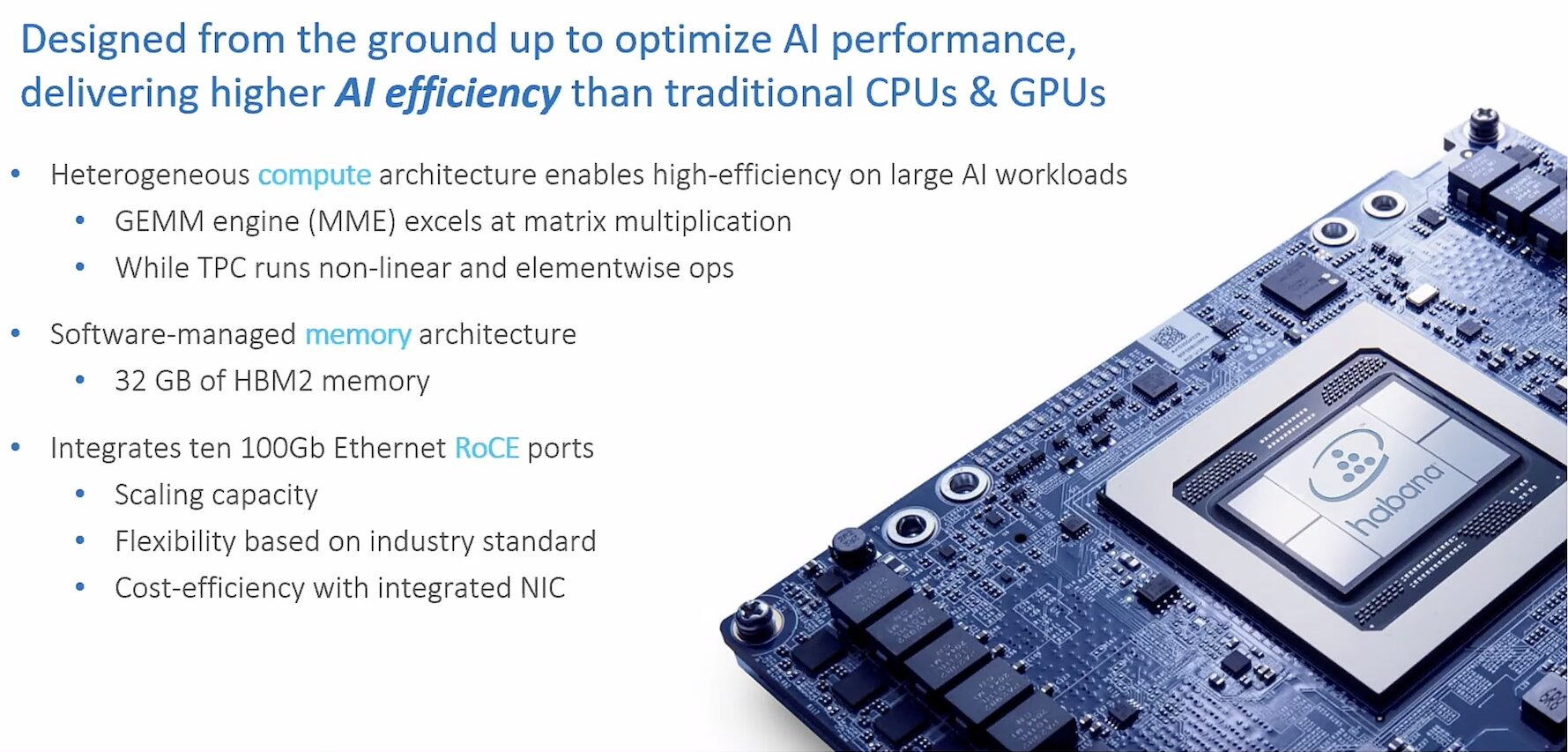

Intel’s AVX-512 instruction set can accelerate certain applications

As expected, Skylake-SP processors implement the AVX-512 SIMD instruction set first seen in the Knights Landing Xeon Phi processors. These 512-bit vector extensions enable faster processing for certain applications, including some machine learning tasks. Combined with Omni-Path, this should continue Intel’s strong showing in HPC alongside Xeon Phi. But Skylake-X and Skylake-SP don’t support all the same AVX-512 instructions as Knights Landing and the next-generation Knights Mill, making for a confusing picture.
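The benefit of wider vectors comes down to simple lane arithmetic: doubling the register width doubles the number of elements each instruction touches. The sketch below computes lanes per register and a theoretical per-core peak, assuming each fused multiply-add (FMA) counts as two floating-point operations and assuming two FMA units per core; that unit count is an assumption, since it varies by SKU.

```python
# Register widths in bits for successive x86 SIMD extensions.
VECTOR_BITS = {"SSE": 128, "AVX2": 256, "AVX-512": 512}


def lanes(isa: str, element_bits: int) -> int:
    """Number of elements of the given width that fit in one vector register."""
    return VECTOR_BITS[isa] // element_bits


def peak_flops_per_cycle(isa: str, element_bits: int, fma_units: int) -> int:
    """Theoretical per-core peak: each FMA counts as 2 floating-point operations."""
    return lanes(isa, element_bits) * 2 * fma_units


if __name__ == "__main__":
    for isa in ("AVX2", "AVX-512"):
        # fma_units=2 is an assumption; not every SKU has two 512-bit FMA units.
        print(isa, lanes(isa, 64), "doubles/register,",
              peak_flops_per_cycle(isa, 64, fma_units=2), "DP FLOPs/cycle")
    # → AVX2 4 doubles/register, 16 DP FLOPs/cycle
    # → AVX-512 8 doubles/register, 32 DP FLOPs/cycle
```

These are ceilings, not measurements: sustained throughput depends on whether the workload vectorizes well and on clock speeds, which Skylake-SP reduces under heavy AVX-512 load.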

Less attention was paid to the “Purley” platform, which supports these new Xeon CPUs. Among the platform’s cool features is the integrated Intel X722 Ethernet controller, which supports 4x 10 Gb Ethernet connections with iWARP RDMA. Microsoft is chiming in with an impressive test of the new Xeon, Optane SSD, and integrated 10 Gb iWARP RDMA Ethernet, delivering reads with 80-microsecond latency.

Purley also introduces an astonishingly large new socket, known as LGA 3647 or “Socket P”. That’s right: We’ve got 3,647 pins (up from 2,011 previously) in a massive array with clips and screws to keep everything in place. And there’s a provision for that big Omni-Path cable sticking out one side too. Now imagine 8 of these sockets on a motherboard and you’re looking at some serious heavy metal!

Peace Sells … but Who’s Buying?

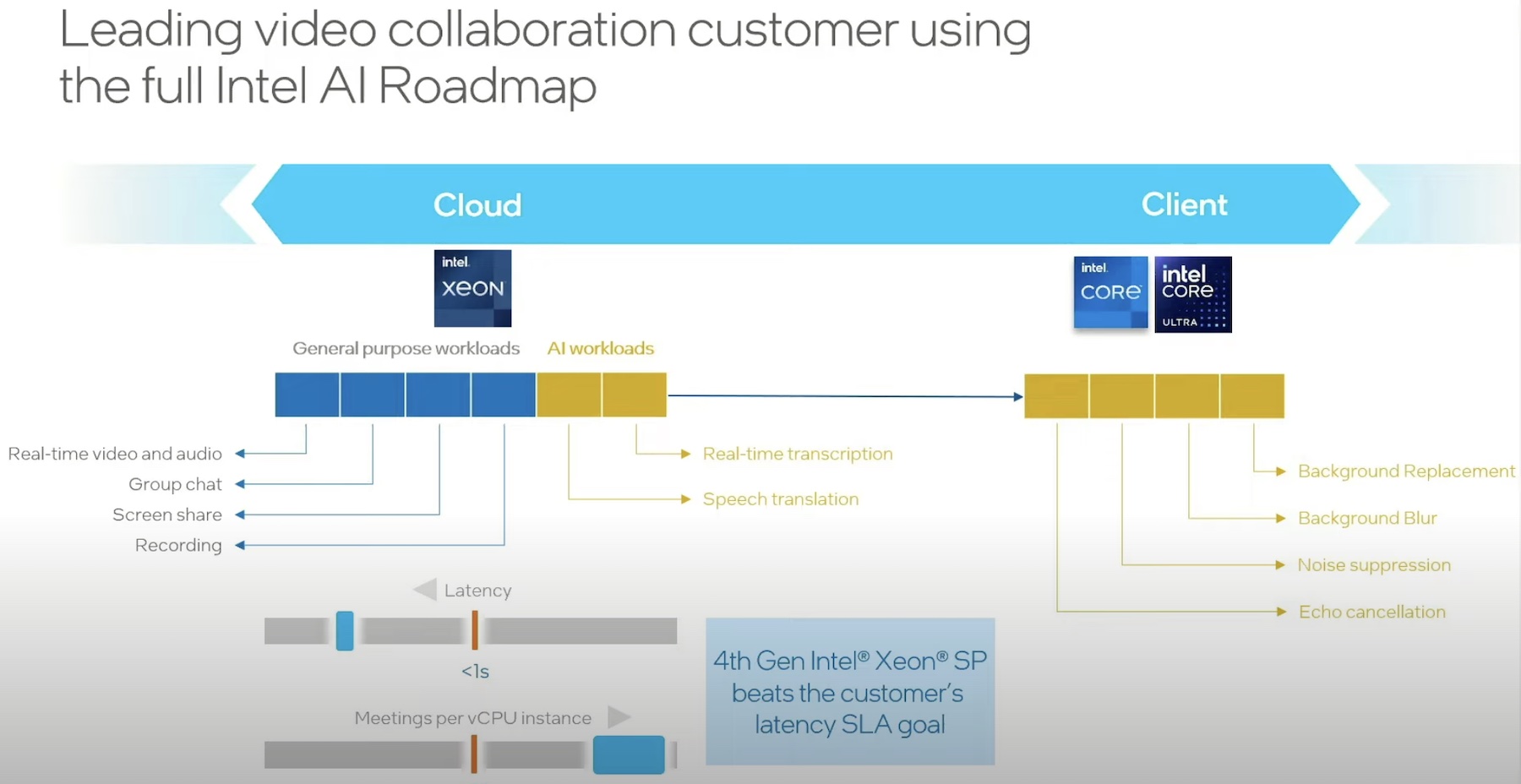

As with practically everything these days, Intel is pitching Xeon Scalable as a panacea for high-performance computing (HPC) and machine learning (ML). And the launch even featured Amazon claiming a more than 100x improvement for some tasks!

No doubt, Xeon Scalable will be useful in HPC as well as in the data center. And the Omni-Path chips might find themselves used alongside Xeon Phi. But AMD is likely to see some uptake in HPC as well. Although AMD doesn’t offer 8-socket servers, most of today’s high-end computing scales out using software and third-party interconnects, so supercomputers can be built from single-processor systems too.

Then there’s the machine learning question. Do customers really use Xeon CPUs for machine learning? Most are rapidly switching to GPGPU computing, with Nvidia’s Tesla leading the way. Intel is trying to strike back, first with Xeon Phi and now with Xeon Scalable. Although AVX-512 is attractive for certain applications, many ML tasks are better suited to massively parallel sets of shorter vector units. It is likely that some combination of very wide vectors and massively parallel processing will emerge as the dominant machine learning platform.

Of course, many of the benefits of Xeon Scalable are simply a result of high clock speeds and multiple cores. Unlike with previous Xeon lineups, customers can now buy processors with just a few cores for many-socket servers as well as processors with many cores for single- or dual-processor servers. This opens entirely new opportunities, especially in light of per-core or per-socket software licensing. And Intel is still the only game in town for 8-socket x86 servers.

Master of Puppets

Intel did most of what they needed to do with the Xeon Scalable launch. There’s enough of a speed boost to get noticed, some interesting new options for server builds, and some cool low-level features that are going to matter in HPC and ML. But AMD is coming on strong, and many-core ARM servers are lurking in the shadows. This may be Intel’s biggest data center platform in a decade, but it’s not a massive advancement overall.