Many discussions of Apple moving from x86 to ARM seem focused on how it’ll manage the migration. Less has been said about how Intel went from the savior of Mac performance and efficiency in 2005 to the cause of product delays and stagnating innovation today. We break down the last 15 years of Intel’s x86 history, which saw a once-unstoppable march of technical progress grind to a halt, signaling to many industry observers that Moore’s Law had indeed ended. Aside from losing Apple as a major customer, where does Intel go from here? Will it try to recapture the heady performance advantage it had in the past, or make a more well-rounded play for the future?

Transcript of Checksum Episode 7: What is Intel’s x86 Future?

Bloomberg’s resident Apple whisperer, Mark Gurman, reported last week that Apple was set to announce the transition of Macs from x86 to its own ARM-based designs. I have no reason to doubt a journalist with such a good track record, so let’s assume it gets announced right about the time this video is published.

Of course, this is kind of old hat for Apple at this point. The original Mac was powered by a Motorola 68000 processor, which was eventually replaced by PowerPC. That architecture stalled out with the G5 CPU, which couldn’t meet the thermal requirements for mobile or emerging multi-core systems. In 2005, Apple announced it was switching to Intel. Now that I think about it, I guess Apple is really bad at betting on CPU architectures…

A lot of the focus on this announcement will be on Apple’s remarkable emergence as a high-end chip maker, scaling up designs for what were then low-power ARM chips into its A-series, which has rivaled or surpassed Intel processors on multicore benchmarks for years now.

But Apple’s move says so much about the situation Intel currently finds itself in. Did Apple really out-innovate Intel, or was it just aiming at a stationary target?

Let’s go back to 2005, when Apple announced the transition, to see where Intel was as a company. Intel had spent the first half of the decade in a pitched battle with AMD on the x86 side. Intel had an edge on mobile with its Pentium M architecture, and its highly marketable Centrino platform helped standardize Wi-Fi on laptops. But its commitment to the power-hungry NetBurst architecture on desktop and server meant AMD was trading blows with it on absolute performance, while often beating it on efficiency. Although Intel still held a healthy market share lead in both categories, AMD’s Opterons were starting to carve out a niche in the HPC space, powering some of the leading supercomputers of the time.

While Intel couldn’t always win on a technical level, it was quite adept at using “creative” marketing and strategies to get its chips into customers’ hands.

While Apple showed off what was then called OS X running on a Pentium 4 test system, the first shipping Intel Macs used Core-branded processors. The Core line, which traced its lineage back to the P6 architecture of the Pentium Pro by way of the Pentium M, would help lead the company into over a decade of innovation, growth, and marketplace dominance.

For much of this time, Intel’s strategy for chip families was called Tick-Tock, formalizing the performance and efficiency increases predicted by Moore’s Law into a marketable bit of strategy. Each Tick moved an existing chip architecture to a smaller fabrication process, which generally increased efficiency and allowed for higher clock speeds. The Tock would follow about a year later with a new microarchitecture on that same process, offering more performance per clock, faster memory support, and more cache. Chip fabrication is an extraordinarily expensive business, and each move to a new fabrication size requires chip makers to refit, if not effectively rebuild, factories for billions of dollars. So it is remarkable to see Intel, from 2005 to 2015, go from making 90nm chips to 14nm over the course of five process shifts. This pace allowed it to add more cores and more frequency while also saving battery life.

Of course, not all of Intel’s businesses were flourishing at this time. Intel has been trying and failing to do mobile since time immemorial. In the mid-2000s it had some moderate success making ARM-based XScale chips for Windows Mobile devices, but it ultimately decided to exit that market in 2006 by selling its PXA lineup to Marvell. Having left mobile just as smartphones were about to eat the world, Intel tried to pivot its Atom processors to fill the gaping hole in its lineup. While Atom had carved out a dominant niche in netbooks, Intel wasn’t able to adapt the line to phones quickly, and always seemed to be playing catch-up. Some OEMs, like Lenovo and Asus, made Atom-based Android devices in the early 2010s, but these often had compatibility issues with Android apps built for ARM and never hit the same performance and efficiency marks. In that time Apple, Samsung, and Huawei all started designing their own ARM chips in-house, leaving Intel to compete with Qualcomm on the high end and MediaTek on the low end. Facing an untenable position, Intel canceled its next-generation Atom chips in 2016 and effectively exited the mobile market.

Withdrawing from the next giant market for chips was bad enough, so naturally it was the perfect time for Intel’s vaunted Tick-Tock strategy to completely fall apart in its traditionally dominant laptop and desktop markets. The 14nm Broadwell chip family, released in 2015, was the latest Tick (Intel’s term for a process shrink) in its semiconductor march, moving the Haswell architecture off the 22nm process Intel had been using since Ivy Bridge in 2012. Since then, Intel has effectively been waiting on its next shrink, continuing to iterate on and refine 14nm instead. It has managed to eke out successive advances on that venerable process, reaching up to 18 cores in its high-end desktop chips and 5GHz in certain lineups.

People have been speculating about when Moore’s Law would come to an end for a while, and for the last half-decade Intel has seemed to be making the case that it is dead as a doornail. Instead of dependable cycles of increased efficiency and better performance, each successive generation felt painfully iterative. Given the lead Intel had built over rivals like AMD, this didn’t have an immediate impact. But being stuck on 14nm gave its red rival an opening, and AMD’s Ryzen processors eventually offered more cores and, in some SKUs, more performance. More importantly, ARM processors have taken up Intel’s brutal march of advancement, with Apple leading the way thanks to its tight hardware and software integration.

In that vein, Apple’s relationship with Intel has been a little frosty since the switch in 2005. Apple never seems to have been thrilled with Intel’s integrated graphics, turning to Nvidia chipsets with integrated graphics in 2008. In a remarkable coincidence, Intel claimed in 2009 that its chipset license with Nvidia didn’t cover its newer Core processors, and Nvidia ended chipset development later that year. Apple released its first in-house designed chip, the A4, in 2010. While Apple’s iOS devices saw annual leaps in performance from their ARM designs, Macs often languished for years without upgrades, to the point where Apple held a press briefing in 2017 to announce that it hadn’t forgotten how to make desktops.

So with innovation lagging, what better time for the world to find out that Intel’s entire line of Core chips had inherent vulnerabilities! While Spectre and Meltdown affected architectures beyond Intel’s, their disclosure in 2018 did nothing to help Intel’s already flagging image. After years of only modest performance gains from successive generations, mitigations that could introduce noticeable performance penalties were especially worrying. And because the root causes lay in the hardware itself and couldn’t be fully fixed with software patches, it seemed to suggest that maybe Intel’s whole x86 edifice was indeed crumbling.

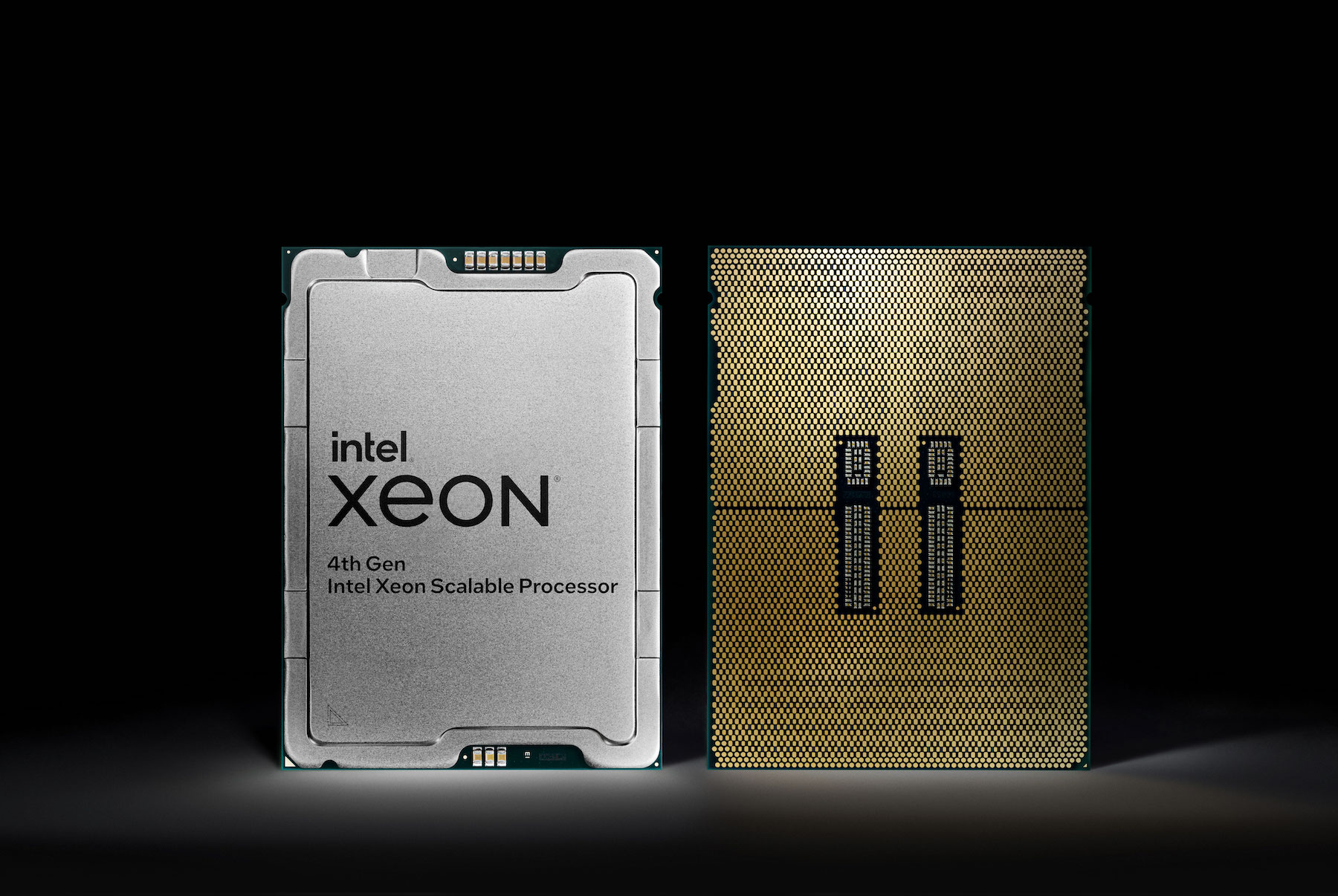

So where does Intel stand in 2020? Well, the company has further distanced itself from the mobile space, selling off its mobile modem business to Apple in July 2019. It has started to actually ship 10nm chips with its Ice Lake family, but these 10th generation chips are still being sold alongside new 14nm lines, and we’ve yet to see Xeons built on that process.

Intel’s new strategy seems to be, at least for now, to concede that it can no longer out-manufacture or out-engineer its rivals on x86 efficiency and performance alone, and to make a stronger platform play instead. The company’s Xe graphics cards are finally coming to market (RIP, Larrabee), which should make for a more well-rounded platform. Intel also recently announced that its upcoming Tiger Lake CPU line will feature Control-flow Enforcement Technology (CET), which is designed to block return- and jump-oriented programming attacks at the silicon level. And Intel recently announced the acquisition of NIC maker Rivet Networks. Together, these moves signal that Intel is looking beyond just the best possible CPU performance (which it can no longer guarantee). By making long-term investments in security, connectivity, and graphics, it’s shaping up to be a very different Intel than the one that dominated in the mid-2000s, and maybe one pointing to a future that doesn’t need Moore’s Law to be successful.