Have all your wildest dreams come true? Have you found the meaning of life? Has a wave of serenity that puts you in tune with the eternal rhythm of the universe overwhelmed you, making all previous concerns look petty by comparison?

No? Then you must have never used hyperconverged infrastructure before!

That may be an exaggeration, but as the new hotness in the enterprise for a while now (so I guess it’s really the old hotness), HCI certainly seems to make a lot of lofty claims. In many ways, HCI is a response to the supposed simplicity of the cloud. For organizations that aren’t ready to move to the cloud, or for which a move is impractical, HCI is a great way to simplify provisioning and managing physical infrastructure.

But in the end, HCI is just about virtualization. You’re using whatever HCI solution you choose to roll out the same VMs that you saw in traditional infrastructure. In doing so, you eliminate the need for a large physical SAN and the infrastructure surrounding it. But you’re not so much replacing the SAN as moving it. This isn’t a rethinking of virtualization architecture, but rather a refinement of it. It still has all the drawbacks of the rather kludgy setup virtualization found itself in as it developed in the enterprise.

Scale Computing works within the HCI space, but instead of just refining how converged their solution is, they’re also rethinking the infrastructure as a whole. They designed their solution from the ground up to be virtualization as you would want it to be, not just an extension of the current status quo.

In doing this, they decided on vertical integration. They went with this because they found current virtualization solutions to be neither inexpensive nor simple, and found that adding features made the whole system more complex and added licensing costs. Always knowing the hardware the system runs on allowed them to build scale natively into the solution. This extends even to mixing and matching older hardware, which eliminates the need for some upgrades and makes it easier to prevent siloing of resources, as is common in more traditional deployments.

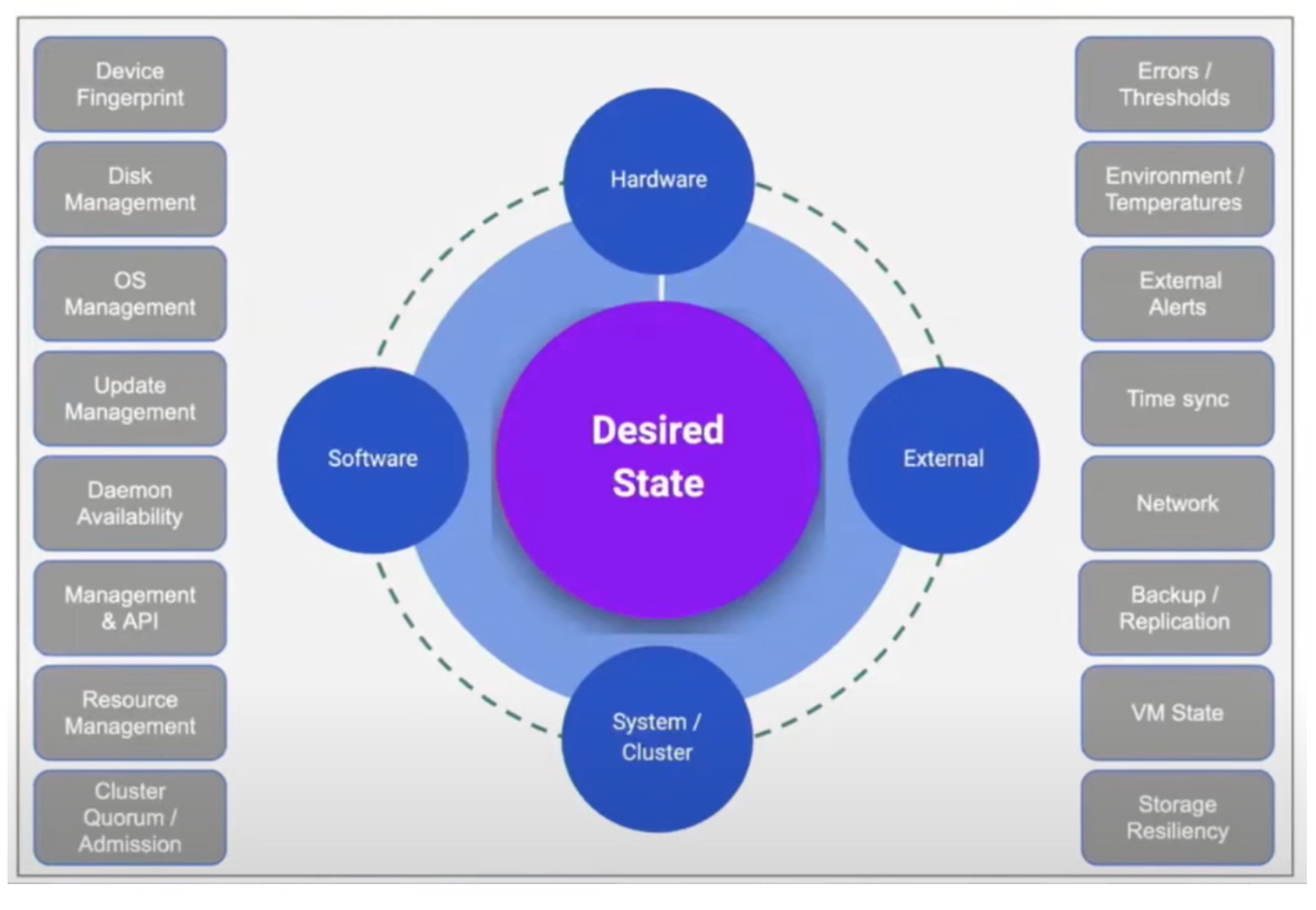

Scale Computing will be more than happy to tell you on a technical level how this is accomplished. The essence is that they’re really frugal with resources. They saw that maintaining a SAN or VSA was really resource intensive, so they decided to get rid of it. The key to this is their HyperCore software, which comes preloaded on each node. This is what enables Scale to flatten a lot of the complexity in traditional HCI. HyperCore includes their own block-level data management system. They’re able to create storage pools at the block level, and then hand them off to any of the VMs in the cluster. It offers the flexibility of a SAN without the onerous resource requirements.
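To make the block-level pooling idea a little more concrete, here is a toy sketch in Python. It is not HyperCore's actual design or API. The `Node` and `ClusterPool` names, the block counts, and the round-robin placement policy are all assumptions made up for illustration. It only shows the general shape of the idea: every node contributes its blocks to one cluster-wide pool, and a VM's virtual disk is carved out of that pool no matter which node the blocks live on.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical cluster node that contributes raw blocks to the shared pool."""
    name: str
    total_blocks: int
    used_blocks: int = 0

    @property
    def free_blocks(self) -> int:
        return self.total_blocks - self.used_blocks


@dataclass
class ClusterPool:
    """Toy cluster-wide block pool (illustrative only, not a real API)."""
    nodes: list[Node] = field(default_factory=list)

    def free_blocks(self) -> int:
        # Free capacity is the sum across every node, old or new.
        return sum(node.free_blocks for node in self.nodes)

    def allocate(self, vm: str, blocks: int) -> dict[str, int]:
        """Carve `blocks` for a VM, spreading them round-robin over nodes with space."""
        if blocks > self.free_blocks():
            raise ValueError(f"not enough free blocks for {vm}")
        placement = {node.name: 0 for node in self.nodes}
        remaining = blocks
        while remaining > 0:
            for node in self.nodes:
                if remaining == 0:
                    break
                if node.free_blocks > 0:
                    node.used_blocks += 1
                    placement[node.name] += 1
                    remaining -= 1
        return placement


# Two nodes of different sizes (assumed numbers) feeding one pool.
pool = ClusterPool([Node("old-node", 100), Node("new-node", 400)])
placement = pool.allocate("vm-1", 300)
print(placement)
```

In this sketch the smaller node simply fills up first and the larger one absorbs the rest, which is one simple way mixed hardware could share a single pool. A real system would also have to handle redundancy, rebalancing, and failure, none of which is modeled here.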

Because their solution emphasizes scale (hence the name), simplicity, and cost-effectiveness, they naturally found their focus in the SMB and mid-market sphere. Their frugal design principle allowed them to deliver scalable HCI with minimal system requirements. Essentially, by eliminating the complicated dependency pyramid that traditional virtualization requires, they’re able to run a complete cluster on what other HCI companies use just for their virtualization requirements. This allows them to offer HCI simplicity, hitting at least 29,000 IOPS continually, at a price point viable for small businesses, but with the ability to scale as they grow. This is made even easier because you can seamlessly mix hardware, as mentioned earlier. And when I say simple, I mean it. Here’s a four-year-old creating a VM.

https://youtu.be/UgBQZ-TVvs0

The actual setup of their hardware can be done in as little as thirty minutes. The process is remarkably simple. Put your 1U nodes in the rack, and cable them up. Hook up the KVM, and input the IP addresses for the nodes. When your last node has its IP address, the cluster will initialize, with the UI available in the web browser within fifteen minutes, ready to do some virtualizing. It supports all the amenities you would want in a modern system: granular control of system resources, algorithmically smart load balancing across all current machines, native flash tiering for prioritized performance, and robust failover support.

HCI reminds me a lot of the smartphone market. Initially, the idea of the smartphone was to create a smaller, touch-optimized version of Windows. They took the way computing looked and miniaturized it. Heck, there was even a Start menu! It worked for some users, but it didn’t fundamentally change the approach. It punted the issue of complexity and refinement back to the user. Scale Computing is the iPhone moment for HCI. It’s the recognition that the category isn’t just compressed traditional VM architecture, but an opportunity to redefine it.