The Need for Speed

One of the major innovations in the storage industry has undoubtedly been the introduction of 3D XPoint memory. Marketed by Intel as Optane, this new storage medium is remarkable for at least two reasons: top-notch performance and high endurance to write cycles (known as P/E, or program/erase cycles).

It’s not all fun and games, though. Optane’s three-dimensional architecture, complex production processes, limited production volumes compared to mass-produced 3D NAND, and high initial investments all make it a much more expensive product.

And in the context of ever-growing capacity consumption, Optane alone cannot keep up. As a first-generation product, it offers limited capacity: only two layers of 3D XPoint are present (compared with 96+ layers for 3D NAND), for a maximum of 1.5 TB per SSD.

The Need for Capacity

In its current state, first-generation Optane cannot deliver on either capacity or price. An Optane-only array would be ridiculously expensive and tragically undersized (a system with 24 NVMe drives would provide only 36 TB of raw capacity).
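The 36 TB figure is simple multiplication: drive count times per-drive capacity. A minimal sketch, assuming every slot holds the largest first-generation Optane SSD (1.5 TB) and ignoring RAID, spare, and formatting overhead; `raw_capacity_tb` is a hypothetical helper name:

```python
def raw_capacity_tb(drive_count: int, tb_per_drive: float) -> float:
    """Raw capacity of a homogeneous array in TB (before data protection or formatting)."""
    return drive_count * tb_per_drive


# 24 NVMe slots, each with a 1.5 TB first-generation Optane SSD
print(raw_capacity_tb(24, 1.5))  # 36.0
```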

QLC 3D NAND is currently the best capacity-oriented flash medium available. QLC stands for quad-level cell, where each memory cell stores four bits of information. QLC effectively commoditizes flash from a capacity standpoint.

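The price to pay is reduced performance and endurance. Storing more bits in each cell means more voltage levels must be distinguished within it, which narrows the margins between states, slows down programming, and lowers the number of program/erase cycles the cell can sustain (more wear per write).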
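The number of voltage levels a cell must hold grows exponentially with the bits it stores (2^bits), which is why each step from SLC to QLC tightens the margins. A short illustration; the SLC/MLC/TLC/QLC table is standard NAND terminology, and `voltage_states` is a hypothetical helper:

```python
# Bits stored per cell for common NAND cell types
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def voltage_states(bits_per_cell: int) -> int:
    """Number of distinct voltage levels needed to encode the given bits per cell."""
    return 2 ** bits_per_cell


for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s) per cell -> {voltage_states(bits)} voltage states")
```

QLC must therefore separate 16 states within the same voltage window that SLC divides into two, leaving far less room for charge drift caused by wear.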

Paradigm Shift with Optane and QLC

Optane and QLC 3D NAND are currently the best possible combination. Using QLC alone eliminates any hopes of supporting performance-oriented workloads. Using Optane alone makes no financial sense. And using TLC 3D NAND doesn’t bring anything new or revolutionary to the table.

The real paradigm shift in terms of storage is to use a very fast tier such as Optane to process write operations immediately, with the highest throughput and lowest latency possible, and to provide a large, affordable capacity tier for data storage.

The StorONE AFAn architecture was designed precisely to take advantage of both technologies. By combining Optane and QLC into a single storage plane, organizations benefit from Optane performance at a fraction of its cost without having to worry about capacity constraints.

Most other storage arrays depend on legacy architectures and have included Optane not as a performance tier, but as a cache. Legacy architectures usually rely on RAM for caching, which limits both cache capacity and persistence. Integrating Optane into such architectures often requires trade-offs: it doesn’t fully leverage Optane’s strengths, and it increases complexity.

With four Optane drives per AFAn, StorONE eliminates the need for a cache and ensures that space is no longer a constraint when accommodating large sustained write operations.

Once enough data has accumulated on the Optane tier, it is written to the QLC tier in large data stripes, which reduces QLC media wear. This also distributes data more evenly across the QLC tier, which further reduces media wear.
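The accumulate-then-destage idea can be sketched as a toy model. This is not StorONE's implementation: the class, its fields, and the rule "destage only whole stripes" are assumptions chosen to show how many small client writes become a few large writes to the capacity tier. Destaging is modeled as instantaneous.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TwoTierStore:
    """Hypothetical fast write tier (Optane-like) that destages to a capacity tier (QLC-like)."""
    fast_capacity: int   # bytes the fast tier can hold
    stripe_size: int     # bytes per full stripe written to the capacity tier
    fast_used: int = 0   # bytes currently buffered in the fast tier
    stripes_written: List[int] = field(default_factory=list)  # stripe sizes sent to the capacity tier

    def write(self, nbytes: int) -> None:
        """Buffer a client write in the fast tier, then destage any full stripes."""
        if self.fast_used + nbytes > self.fast_capacity:
            raise RuntimeError("fast tier full: destaging did not keep up")
        self.fast_used += nbytes
        self._destage()

    def _destage(self) -> None:
        """Move data to the capacity tier only in whole stripes; partial data stays buffered."""
        while self.fast_used >= self.stripe_size:
            self.fast_used -= self.stripe_size
            self.stripes_written.append(self.stripe_size)


store = TwoTierStore(fast_capacity=1024, stripe_size=256)
for _ in range(10):
    store.write(100)  # ten small client writes of 100 bytes each
print(len(store.stripes_written), store.fast_used)  # 3 232
```

Ten 100-byte writes reach the capacity tier as three 256-byte stripes, with the remaining 232 bytes still buffered in the fast tier.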

Transparent, automated tiering is a key attribute of modern storage architectures. It ensures that data placement and movement are performed optimally, without human intervention or manually defined placement rules.

Performance

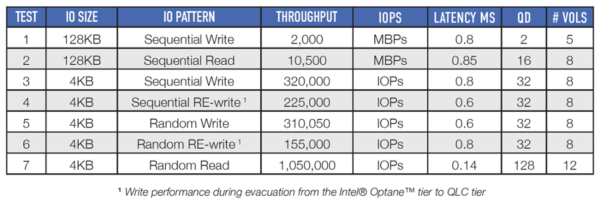

StorONE ran several tests to showcase the performance of its architecture.

These tests were performed with 3x Optane DC P4800X NVMe drives (750 GB each) and 5x QLC D5-P4320 NVMe drives (7.68 TB each), and give an idea of the throughput to and from the AFAn storage subsystem (all values are client-side IOPS).
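To put the "fraction of its cost" argument in numbers, the test configuration's raw capacity can be split by tier. A small sketch, assuming decimal units and taking the QLC drives as 7.68 TB (7,680 GB) each; overheads are ignored:

```python
# Drive counts and sizes from the test configuration, in decimal GB
OPTANE_GB = 3 * 750    # three Optane drives, 750 GB each
QLC_GB = 5 * 7_680     # five QLC drives, 7.68 TB (7,680 GB) each

total_gb = OPTANE_GB + QLC_GB
optane_share = OPTANE_GB / total_gb

print(f"Optane: {OPTANE_GB} GB, QLC: {QLC_GB} GB, Optane share of raw capacity: {optane_share:.1%}")
# Optane: 2250 GB, QLC: 38400 GB, Optane share of raw capacity: 5.5%
```

In this configuration, roughly one byte in eighteen of raw capacity sits on Optane; the rest is inexpensive QLC.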

The most interesting tests were numbers 4 and 6, in which the AFAn was subjected to sustained writes. This caused the Optane tier to fill up and triggered data movement from the Optane tier to the QLC tier while the Optane tier was still receiving incoming writes.
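Why sustained writes eventually fill the Optane tier can be seen with a simplified fluid model. This model is an assumption, not a description of the AFAn: if incoming writes arrive faster than data drains to QLC, the fast tier gains the difference every second until it is full. After that point, sustained ingest is bounded by the drain rate. `seconds_until_full` is a hypothetical helper:

```python
import math


def seconds_until_full(capacity_bytes: float, ingest_bps: float,
                       drain_bps: float, used_bytes: float = 0.0) -> float:
    """Time for a fast tier to fill under constant ingest and drain rates (fluid model)."""
    net_fill_rate = ingest_bps - drain_bps
    if net_fill_rate <= 0:
        # Destaging keeps up with (or outpaces) incoming writes: the tier never fills.
        return math.inf
    return (capacity_bytes - used_bytes) / net_fill_rate


print(seconds_until_full(100, 10, 5))  # 20.0
```

Tests that run long enough to reach this state therefore measure the combined system rather than the Optane tier alone.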

These tests demonstrate that even under heavy sustained I/O, the system still delivers between 155,000 and 225,000 IOPS, depending on the write profile and block size. Latency remains excellent even though data must constantly be moved to QLC, a medium with a read-oriented performance profile whose write latency and write IOPS are comparatively weak.
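IOPS alone does not indicate bandwidth. Throughput is IOPS multiplied by block size, which is why the same system reports different IOPS for different block sizes. A sketch of the conversion; the 4 KiB block size below is an assumption for illustration, not a figure from the tests:

```python
def iops_to_mb_per_s(iops: float, block_size_bytes: int) -> float:
    """Throughput in decimal MB/s implied by an IOPS figure at a fixed block size."""
    return iops * block_size_bytes / 1_000_000


# Illustration only: assumes a 4 KiB (4,096-byte) block size
print(iops_to_mb_per_s(155_000, 4_096))  # 634.88
```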

In all the test cases above, StorONE delivered between 85% and 100% of the raw performance of the physical drives, reaching over one million read IOPS and 300K write IOPS.
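One way to read "percentage of raw performance" is measured client-side IOPS divided by the aggregate rated IOPS of the drives involved. This interpretation is an assumption, and the numbers in the example are hypothetical, not datasheet or test values:

```python
def percent_of_raw(measured_iops: float, per_drive_iops: float, drive_count: int) -> float:
    """Measured IOPS as a percentage of the drives' combined rated IOPS."""
    raw_iops = per_drive_iops * drive_count  # assumption: raw = sum of per-drive ratings
    return 100.0 * measured_iops / raw_iops


# Hypothetical example: 5 drives rated at 200,000 IOPS each, 850,000 IOPS measured
print(percent_of_raw(850_000, 200_000, 5))  # 85.0
```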