In our previous article, we covered how the combination of Optane and QLC flash is a game changer that disrupts the storage industry. One important aspect of performance in storage architectures is how quickly write operations complete.

The Unbearable Weight of Legacy

Beyond the gloss of marketing and branding, some of the storage systems sold in 2020 can trace their roots back to architectures that were designed one or even two decades ago. Those systems were designed at a time when hard disks reigned supreme, storage media was inherently slow, and cache techniques were needed to increase the system performance.

Historically, volatile RAM was used for these caching functions, and storage arrays were equipped with batteries. These would give enough time to flush cached data from the RAM to hard drives in case of a power failure.

Flash Displaces RAM But Reliability Issues Remain

Flash memory partially solved the issue of cache persistency. An additional level of cache was added between RAM and hard drives. With time, caching moved away from expensive and very small RAM capacities into flash.

As flash became mainstream, the industry moved away from SAS to NVMe SSDs. SAS adapters provided disk management features, but NVMe SSDs (such as Optane) are directly connected to the CPU through the PCIe bus.

This lack of disk management features makes NVMe-based caching tricky. Events that the SAS adapter would have handled in the past are no longer intercepted, and a disk failure can cause a kernel crash with a high risk of data loss or data corruption.

Write Cache Types

Because NVMe SSDs can become a rather serious single point of failure in a storage system, cache mechanisms need to be adapted to NVMe drives. When Optane is used as a cache, the potential for data loss needs to be mitigated.

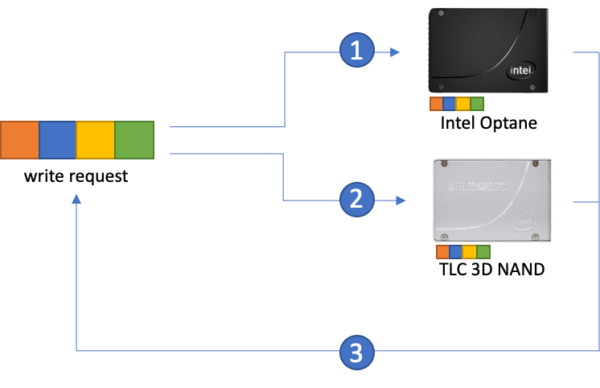

Write-Through Cache

To prevent data loss, write-through caching can be used. In this mechanism (as depicted below), a single write operation is sent to two different media types (steps 1 and 2), after which the write is acknowledged in step 3. At the storage array's discretion, a tiering mechanism can then move data to a colder tier in step 4.

Figure 1 – Write-Through Cache Mechanism – Data is written simultaneously to two different media types: the Optane cache and the TLC flash storage (steps 1 and 2 happen in parallel). The write is subsequently acknowledged.

Write-Through Caches have a single drawback, but a serious one. Because both write operations happen in parallel (steps 1 and 2 in Figure 1 above), and the write is confirmed only when both operations have completed (step 3), the effective front-end write latency (as felt at the application level) is set by the slower of the two media, which is the TLC flash.

With this mechanism, one is left to wonder about the rationale for having a cache at all, since it brings no particular advantage for writes.
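The latency argument above can be sketched in a few lines. This is a minimal model, not a description of any vendor's implementation: the function name and the two latency figures are illustrative assumptions chosen only to show the effect, not measurements.

```python
def write_through_ack_latency_us(cache_latency_us: float,
                                 backing_latency_us: float) -> float:
    """Latency seen by the application for one write-through operation.

    Both writes are issued in parallel and the acknowledgment waits for
    both, so the slower medium determines the result.
    """
    return max(cache_latency_us, backing_latency_us)


# Hypothetical per-write latencies in microseconds (assumed values).
OPTANE_WRITE_US = 10.0
TLC_WRITE_US = 100.0

ack_us = write_through_ack_latency_us(OPTANE_WRITE_US, TLC_WRITE_US)
print(f"write-through acknowledgment after {ack_us} us")
```

Whatever values are plugged in, the acknowledged latency never drops below that of the slower medium, which is why the faster cache device does not help on the write path.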

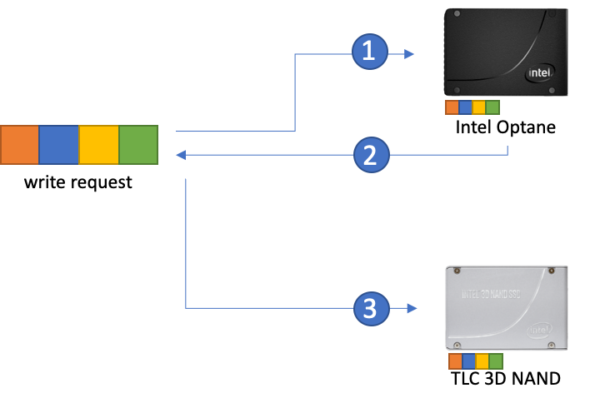

Write-Back Cache

This mechanism eliminates the latency issue of Write-Through Cache, but the data is initially written to a single medium and is vulnerable until it has been copied to the backing storage.

Figure 2 – Write-Back Cache Mechanism – Data is written to an unprotected Optane drive (step 1) and the write is immediately acknowledged (step 2). After the acknowledgment, data is written to the TLC flash (step 3). Cached data is vulnerable to failure until step 3 is completed.

The challenge with Write-Back Cache, obviously, is that data is unprotected until after it has been effectively written to TLC media, which happens after the write is confirmed. This “YOLO” approach makes Write-Back Cache ill-suited for production-grade enterprise workloads.
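To make the exposure window concrete, here is a toy model of a write-back cache. It is a sketch under simplifying assumptions: the class and method names are hypothetical, blocks are plain dictionary entries, and the cache device is treated as a single unprotected drive, as in Figure 2.

```python
class WriteBackCache:
    """Toy write-back cache: acknowledge first, persist to backing later."""

    def __init__(self):
        self.dirty = {}    # blocks that exist only on the cache device
        self.backing = {}  # blocks already written to the backing tier

    def write(self, block_id, data):
        # Steps 1 and 2: store in the cache and acknowledge immediately.
        self.dirty[block_id] = data
        return "ack"

    def destage(self):
        # Step 3: copy cached blocks to the backing tier.
        self.backing.update(self.dirty)
        self.dirty.clear()

    def cache_device_fails(self):
        # Every block not yet destaged is lost, although it was acknowledged.
        lost = sorted(self.dirty)
        self.dirty.clear()
        return lost


cache = WriteBackCache()
cache.write(1, b"first")
cache.destage()            # block 1 is now safe on the backing tier
cache.write(2, b"second")  # acknowledged, but not yet destaged
lost_blocks = cache.cache_device_fails()
print("acknowledged blocks lost:", lost_blocks)
```

In this run, block 2 was acknowledged to the application and then lost, while block 1 survived because it had already been destaged.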

Storage solutions that implement Write-Through or Write-Back cache configurations with Optane are unlikely to deliver any performance or cost gains. Write operations have a high-frequency profile and require media with adequate endurance. Affordable QLC media is optimized for reads and cannot sustain the write frequency associated with a cache. This forces TLC to be used as a companion of misfortune to Optane.

The result is an inefficient combination of underperforming Optane and expensive, wasted TLC media that would have been better used somewhere else. At best, customers get fooled by the presence of Optane as a marketing trick.

Optane in the Post-Cache Era

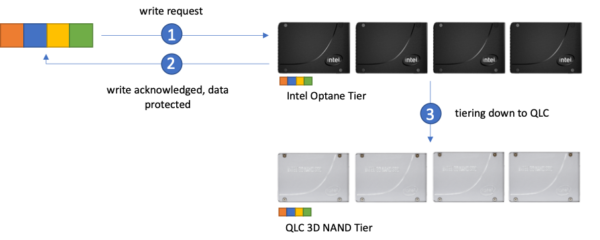

Modern storage architectures such as StorONE AFAn understand how Optane and QLC work hand-in-hand. By using Optane as a storage tier and not as a cache, data is immediately written to Optane, is protected against media failures, and can reside there as long as there is space within that tier. When the high watermark is hit, the AFAn tiering mechanism starts moving the oldest and least active data to the QLC tier by grouping data and performing large sequential writes.

Figure 3 – StorONE AFAn – Data is written to the highly available Optane storage tier (step 1), the write is immediately acknowledged (step 2) and data is already protected. When the high usage watermark is hit on the Optane tier, data is tiered down to the QLC tier (step 3).
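The watermark-driven tiering described above can be illustrated with a small model. This is not StorONE's implementation: the class name, the block-count thresholds, the low watermark at which tiering stops, and the use of least-recently-used order as a stand-in for "oldest and least active" are all assumptions made for the sketch. Data protection on the fast tier is not modelled.

```python
from collections import OrderedDict


class TieredStore:
    """Toy two-tier store with watermark-triggered tier-down."""

    def __init__(self, high_watermark_blocks=8, low_watermark_blocks=6):
        self.high = high_watermark_blocks  # usage that triggers tiering
        self.low = low_watermark_blocks    # assumed level where tiering stops
        self.fast = OrderedDict()          # fast tier, least active first
        self.capacity = {}                 # capacity tier (QLC in the article)
        self.sequential_writes = []        # one list of block ids per batch

    def write(self, block_id, data):
        self.fast[block_id] = data
        self.fast.move_to_end(block_id)    # mark as most recently active
        if len(self.fast) > self.high:
            self._tier_down()
        return "ack"

    def read(self, block_id):
        if block_id in self.fast:
            self.fast.move_to_end(block_id)
            return self.fast[block_id]
        return self.capacity[block_id]

    def _tier_down(self):
        # Group the least active blocks and move them as one large batch.
        batch = []
        while len(self.fast) > self.low:
            block_id, data = self.fast.popitem(last=False)
            self.capacity[block_id] = data
            batch.append(block_id)
        self.sequential_writes.append(batch)


store = TieredStore()
for i in range(8):
    store.write(i, f"data-{i}")
store.read(0)              # block 0 becomes recently active
store.write(8, "data-8")   # ninth block exceeds the high watermark
print("tiered down in one batch:", store.sequential_writes)
```

Block 0 stays on the fast tier because it was read recently, while blocks 1, 2 and 3 are moved together in a single batch, mirroring the large sequential writes the article mentions.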

Perhaps the key takeaway is to constructively question claims where storage vendors position Optane as a guarantee of performance. Taken alone, Optane is undoubtedly the best solid-state media type currently available, but without an architecture built around it, Optane is just an expensive piece of hardware.