The Gestalt IT Field Day was a great success in bringing together a mixture of delegates from varying disciplines. Following the presentations from 3Par and Symantec, there was heated debate about the implementation of Thin Provisioning and the ability to reclaim released storage resources. This post covers the basic concepts of Thin Provisioning and, more importantly, how deleted resources can be recovered over time.

Thin Provisioning Primer

The underlying concept of thin provisioning is pretty simple; provide storage resources to those requesting it only as they need it.

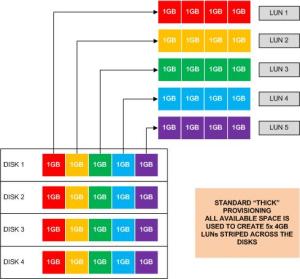

Think of a standard ‘thick provisioned’ environment. As thick LUNs are created, storage is assigned and mapped to each LUN to the full extent of the size requested. See the first graphic, which shows a RAID group of four 5GB drives. I’ve assumed “RAID-0” here for simplification, i.e. no RAID overhead. Each LUN (coloured separately) is made up from a 1GB slice of the available disks. Thick provisioning is great if the LUNs are all 100% used. In that instance, 100% of the available physical space is used up. In practice, however, a LUN is almost never 100% used and so wastage exists.

Look at the second graphic. This shows how thin provisioned LUNs work. As storage is requested by the LUN, the space is mapped to physical blocks of storage. In this example, none of the logical LUNs are fully utilised and so they don’t consume their full theoretical capacity. This means that the pool of space can be over-subscribed and a sixth new LUN created. Obviously there’s no such thing as a free lunch or infinite storage resources, and in this example if a further five blocks are requested then physical space would be exhausted. The next request for a new storage block would result in an error, and this represents the main concern with over-subscribing thin provisioned volumes.
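To make the over-subscription risk concrete, here is a minimal sketch of allocate-on-write in Python. It is a toy model, not how any particular array works: the `ThinPool` class, the `PoolExhausted` error and the numbers (20 physical blocks, six LUNs of five blocks each, 15 blocks already written) are my own assumptions, chosen to roughly match the graphic.

```python
class PoolExhausted(Exception):
    """Raised when a write needs a new physical block and none are free."""


class ThinPool:
    """Hypothetical thin pool: physical blocks are mapped only on first write."""

    def __init__(self, physical_blocks):
        self.free = physical_blocks   # unallocated physical blocks
        self.luns = {}                # LUN name -> (logical size, mapped block set)

    def create_lun(self, name, logical_blocks):
        # Creating a thin LUN consumes no physical space at all.
        self.luns[name] = (logical_blocks, set())

    def write(self, name, block):
        size, mapped = self.luns[name]
        if not 0 <= block < size:
            raise IndexError("block is outside the LUN")
        if block in mapped:
            return                    # already backed by physical storage
        if self.free == 0:
            raise PoolExhausted(f"no physical block left for {name}[{block}]")
        self.free -= 1
        mapped.add(block)

    def subscribed(self):
        # Total logical capacity promised to hosts.
        return sum(size for size, _ in self.luns.values())


# Assumed numbers: 20 physical blocks, six LUNs of 5 logical blocks each.
pool = ThinPool(physical_blocks=20)
for n in range(6):
    pool.create_lun(f"lun{n}", logical_blocks=5)

# Five LUNs each write three blocks: 15 physical blocks in use.
for n in range(5):
    for block in range(3):
        pool.write(f"lun{n}", block)
free_before = pool.free

# A further five blocks exhaust the pool...
for block in range(5):
    pool.write("lun5", block)

# ...and the next new block fails, although lun0 is not logically full.
try:
    pool.write("lun0", 3)
    exhausted = False
except PoolExhausted:
    exhausted = True

print(pool.subscribed(), free_before, pool.free, exhausted)
```

The pool has promised 30 blocks against 20 physical ones, and nothing goes wrong until the twenty-first distinct block is written. That is why over-subscribed pools need monitoring of free physical space rather than of LUN sizes.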

Now that we understand the concept of thin provisioning, there are two further aspects to consider. Firstly, when we say a LUN isn’t 100% utilised, what do we mean? Secondly, how can deleted blocks be returned to our free physical pool?

As LUNs are presented to hosts, they are formatted with a file system; on Windows, for example, it’s NTFS, while a VMware environment would use VMFS. The file system will have a standard layout which determines where the file index sits and how files are allocated onto the disk. Have a look at the third graphic. This is a map of the C:\ drive for one of my servers. Each block represents approximately 22MB. You can clearly see the MFT (NTFS index) in the centre of the volume. A large percentage of the disk is unused. In a thin provisioned environment, storage would have been requested only for the blocks with valid data, and in this way a LUN can be less than 100% allocated.

OK, so what happens if I create some files then delete them on the file system? Most file systems remove a file by deleting the entry in the index rather than physically overwriting the file contents with binary zeros. This is quick and efficient (if slightly unsafe security-wise). The actual data isn’t overwritten, and it is this ‘logical’ deletion that enables undelete utilities to work. The trouble is, most disk arrays are not file system aware and so can’t detect the logical deletion of a file. Those arrays that offer thin provisioning typically detect unwanted space by looking for blocks containing only ‘binary zeros’. This means that simply deleting files will not release unused space back to the free block pool (except on one storage device, Drobo, which I’ll discuss in more detail another time). Arrays which are capable of recovering unused space need to see the data overwritten with zeros in order to recover it.
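The difference between a logical delete and a zeroed block can be shown with a small Python sketch. Everything here is hypothetical: `mapped` stands in for the array’s view of a LUN (logical block number to contents), `index` stands in for the file system’s index, and `reclaim_zero_blocks` is an assumed name for a zero-detection pass, not a real array function.

```python
BLOCK_SIZE = 4096
ZERO_BLOCK = bytes(BLOCK_SIZE)   # a block containing only binary zeros


def reclaim_zero_blocks(mapped):
    """Unmap every all-zero block and return how many were released."""
    zeroed = [idx for idx, data in mapped.items() if data == ZERO_BLOCK]
    for idx in zeroed:
        del mapped[idx]
    return len(zeroed)


# The array's view: three mapped blocks holding file data.
mapped = {
    0: b"\x01" * BLOCK_SIZE,
    1: b"\x02" * BLOCK_SIZE,
    2: b"\x03" * BLOCK_SIZE,
}
# The file system's view: which blocks belong to which file.
index = {"a.txt": [0, 1], "b.txt": [2]}

# Logical delete: only the index entry goes; the data stays on the LUN.
blocks_of_a = index.pop("a.txt")
freed_after_delete = reclaim_zero_blocks(mapped)

# Overwrite the freed blocks with zeros, then scan again.
for idx in blocks_of_a:
    mapped[idx] = ZERO_BLOCK
freed_after_zeroing = reclaim_zero_blocks(mapped)

print(freed_after_delete, freed_after_zeroing, sorted(mapped))
```

The first scan releases nothing, because from the array’s side the deleted file’s blocks still hold non-zero data. Only once those blocks are overwritten with zeros does the second scan find and release them.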

This (finally) brings us to the cookie analogy. Imagine cookies are my free pool blocks. There are a number of ways in which storage arrays operate in handling thin provisioning – different cookie monster personalities:

- The Greedy Cookie Monster; grabs all the cookies he thinks he might eat, but never eats all of them and never returns any – this is the thick provisioning model.

- The Selfish Cookie Monster; only grabs cookies as he gets hungry but if he doesn’t eat them immediately, doesn’t give them back – this is thin provisioning with no zero block reclaim. Eventually thin provisioning will become thick as all logical blocks in a LUN become mapped to physical storage.

- The Nice Cookie Monster; takes the cookies as he gets hungry but only returns uneaten cookies if asked – this is thin provisioning with manual zero block reclaim. A manual process is required to zero out the unused space and to return it to the free pool.

- The Saintly Cookie Monster; takes the cookies as he gets hungry and offers them back as soon as he realises he can’t eat them – this is thin provisioning with automatic zero block or free space reclaim.
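The four personalities boil down to how many physical blocks a LUN holds on to after some of its data has been deleted. Here is a rough Python sketch of that, under simplifying assumptions of my own: the `Policy` names and the `physical_blocks_held` function are hypothetical, and automatic reclaim is treated as instantaneous.

```python
from enum import Enum


class Policy(Enum):
    THICK = "greedy"
    THIN_NO_RECLAIM = "selfish"
    THIN_MANUAL_RECLAIM = "nice"
    THIN_AUTO_RECLAIM = "saintly"


def physical_blocks_held(policy, lun_size, written, deleted,
                         reclaim_requested=False):
    """Physical blocks held after `written` distinct blocks have been
    written and `deleted` of them later freed by the file system."""
    if not 0 <= deleted <= written <= lun_size:
        raise ValueError("need 0 <= deleted <= written <= lun_size")
    if policy is Policy.THICK:
        return lun_size                 # everything is allocated up front
    if policy is Policy.THIN_NO_RECLAIM:
        return written                  # grows on write, never shrinks
    if policy is Policy.THIN_MANUAL_RECLAIM:
        # Shrinks only after someone zeroes the free space and asks.
        return written - deleted if reclaim_requested else written
    return written - deleted            # automatic reclaim (assumed instant)


# A 10-block LUN: 6 blocks written, 4 of them deleted afterwards.
held = {p.value: physical_blocks_held(p, 10, 6, 4) for p in Policy}
held_after_manual = physical_blocks_held(
    Policy.THIN_MANUAL_RECLAIM, 10, 6, 4, reclaim_requested=True)

print(held, held_after_manual)
```

With these numbers the Greedy monster holds 10 blocks, the Selfish and Nice monsters hold 6, and the Saintly monster holds 2. The Nice monster drops to 2 only once the manual reclaim has been run.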

So, of the storage arrays out there offering thin provisioning, which fit the various Cookie Monster personality types? I’ll leave that for you to guess.